Aloof as stardust rains

Are memory dim prints

Eliding tensed face

By shadows within of

All conquering space that

Inly trusts each friend

Of madness—whose embrace

Accept with no delays

To take one final look

Before you turn Rose ways.

A rather rare meter in English, but much easier once you let enjambments in.

Précis

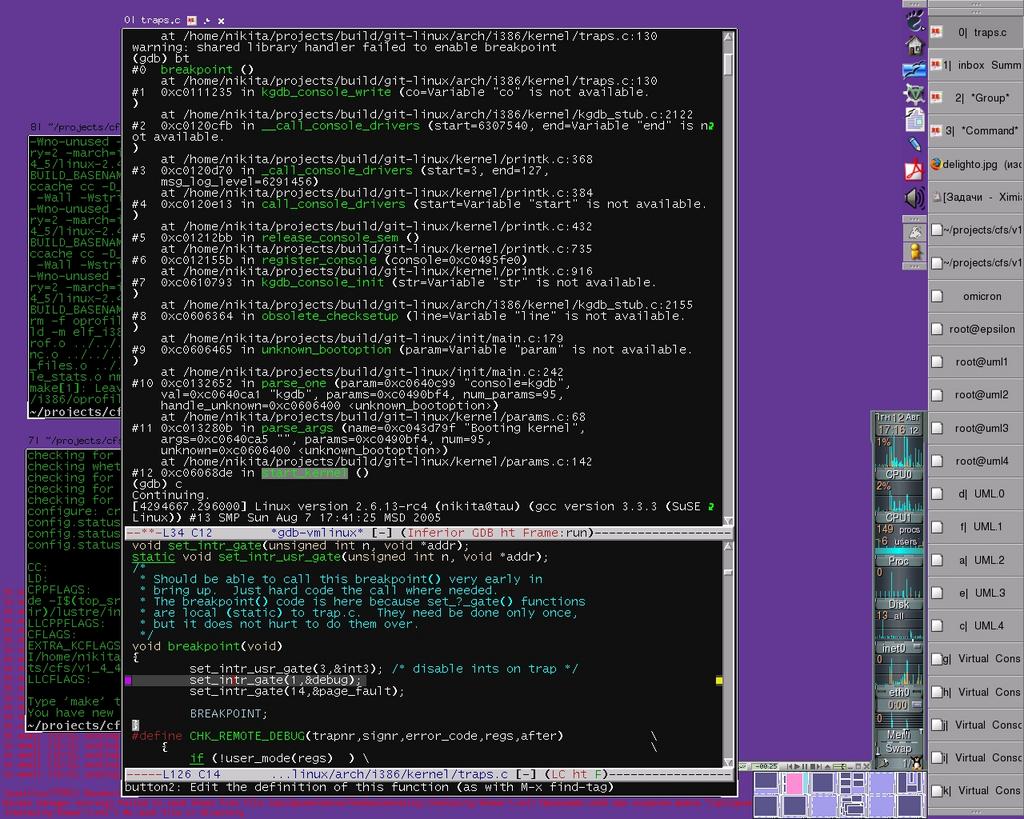

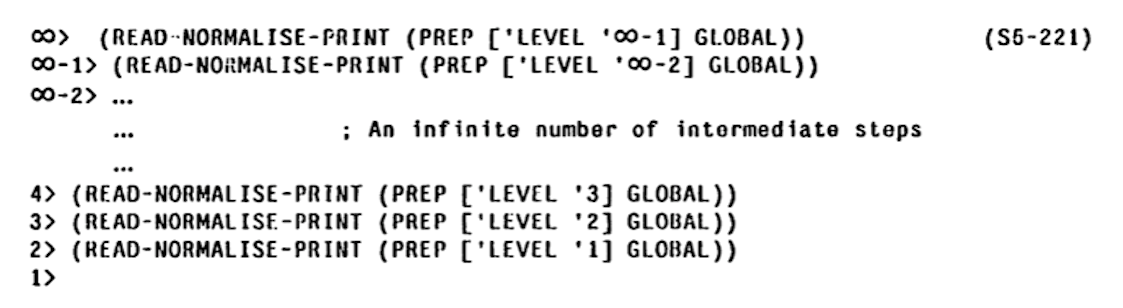

3-LISP is a dialect of LISP designed and implemented by Brian C. Smith as part of his PhD thesis Procedural Reflection in Programming Languages (what this thesis calls "reflection" is nowadays more usually called "reification"). A 3-LISP program is conceptually executed by an interpreter written in 3-LISP, which is itself executed by an interpreter written in 3-LISP, and so on ad infinitum. This forms a (countably) infinite tower of meta-circular (v.i.) interpreters. A reflective lambda is a function that is executed one tower level above its caller. Reflective lambdas provide a very general language extension mechanism.

The code is here.

Meta-circular interpreters

An interpreter is a program that executes programs written in some programming language.

A meta-circular interpreter is an interpreter for a programming language written in that language. Meta-circular interpreters can be used to clarify or define the semantics of the language by reducing the full language to a sub-language in which the interpreter is expressed. Historically, such definitional interpreters became popular within the functional programming community; see the classic Definitional interpreters for higher-order programming languages. Certain important techniques were classified and studied in the framework of meta-circular interpretation. For example, continuation passing style can be understood as a mechanism that makes meta-circular interpretation independent of the evaluation strategy: it allows an eager meta-language to interpret a lazy object language and vice versa. As a by-product, a continuation passing style interpreter is essentially a state machine and so can be implemented in hardware, see The Scheme-79 chip. Similarly, defunctionalisation of a language with higher-order functions yields a first-order interpreter for it. But meta-circular interpreters occur in imperative contexts too. For example, the usual proof of the Böhm–Jacopini theorem (interestingly, it was Corrado Böhm who first introduced meta-circular interpreters in his 1954 PhD thesis) constructs for an Algol-like language a meta-circular interpreter expressed in some goto-less subset of the language and then specialises this interpreter for a particular program in the source language.

Given a language with a meta-circular interpreter, suppose that the language is extended with a mechanism to trap to the meta-level. For example, in a LISP-like language, that trap can be a new special form (reflect FORM) that directly executes (rather than interprets) FORM within the interpreter. Smith is mostly interested in the reflective (i.e., reification) powers obtained this way, and it is clear that the meta-level trap provides a very general language extension method: one can add new primitives, data types, flow and sequencing control operators, etc. But if you try to add reflect to an existing LISP meta-circular interpreter (for example, see p. 13 of the LISP 1.5 Programmer's Manual), you hit a problem: FORM cannot be executed at the meta-level, because at this level it is not a form, but an S-expression.

Meta-interpreting machine code

To understand the nature of the problem, consider a very simple case: the object language is the machine language (or, equivalently, the assembly language) of some processor. Suppose that the interpreter for the machine code is written in (or, more realistically, compiled to) the same machine language. The interpreter maintains the state of the simulated processor, that is, among other things, its registers and memory. If the object (interpreted) code can access a register R0, then the interpreter has to keep the contents of this register somewhere, but typically not in its own (the interpreter's) R0. Similarly, a memory word visible to the interpreted code at an address ADDR is stored by the interpreter at some, generally different, address ADDR' (although, by applying the contractive mapping theorem and a lot of hand-waving, one might argue that there will be at least one word stored at the same address at the object- and meta-levels). Suppose that the interpreted machine language has the usual sub-routine call-return instructions call ADDR and return, and is extended with a new instruction reflect ADDR that forces the interpreter to call the sub-routine ADDR. At the very least the interpreter needs to convert ADDR to the matching ADDR'. This might not be enough because, for example, the object-level sub-routine ADDR might not be contiguous at the meta-level, i.e., it is not guaranteed that if ADDR maps to ADDR' then (ADDR + 1) maps to (ADDR' + 1). This example demonstrates that a reflective interpreter needs a systematic and efficient way of converting or translating between object- and meta-level representations. If such a method is somehow provided, reflect is a very powerful mechanism: by modifying the interpreter's state and code it can add new instructions, addressing modes, condition bits, branch predictors, etc.
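To make the ADDR to ADDR' problem concrete, here is a small Python sketch. Everything in it is an assumption for illustration only: the names to_meta, to_object and ToyInterpreter, the base address, and a layout in which each simulated word is stored next to a bookkeeping tag word. Under such a layout object-level neighbours are not meta-level neighbours, so translating only the entry address of a sub-routine is not enough.

```python
# Hypothetical layout: each object-level word ADDR lives at meta-level
# address ADDR' = BASE + STRIDE * ADDR, followed by a bookkeeping tag word.
BASE, STRIDE = 0x1000, 2


def to_meta(addr: int) -> int:
    """Translate an object-level address ADDR into the meta-level ADDR'."""
    return BASE + STRIDE * addr


def to_object(meta_addr: int) -> int:
    """Inverse translation, defined only for addresses of simulated words."""
    offset = meta_addr - BASE
    if offset < 0 or offset % STRIDE:
        raise ValueError(f"{meta_addr:#x} is not an object-level word")
    return offset // STRIDE


class ToyInterpreter:
    def __init__(self, size: int):
        self.meta_memory = {}   # the interpreter's own memory
        self.regs = {"R0": 0}   # simulated R0, distinct from the host's R0
        for addr in range(size):
            self.store(addr, 0)

    def store(self, addr: int, word: int) -> None:
        self.meta_memory[to_meta(addr)] = word
        self.meta_memory[to_meta(addr) + 1] = "tag"

    def load(self, addr: int) -> int:
        return self.meta_memory[to_meta(addr)]
```

For example, to_meta(5) is 0x100a but to_meta(6) is 0x100c, not 0x100b: a reflect that translated only the entry address and then executed consecutive meta-level words would run into tag words.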

N-LISP for a suitable value of N

In his thesis Prof. Smith analyses what it would take to construct a dialect of LISP for which a faithful reflective meta-circular interpreter is possible. He starts by defining a formal model of computation with an (extremely) rigorous distinction between meta- and object-levels (and, hence, between use and mention). It is then determined that this model cannot be satisfactorily applied to traditional LISP (which is called 1-LISP in the thesis and is mostly based on Maclisp). The reason is that LISP's notion of evaluation conflates two operations: normalisation, which operates within a level, and reference, which moves one level down. A dialect of LISP that consistently separates normalisation and reference is called 2-LISP (the then new Scheme is called 1.75-LISP). The definition of 2-LISP occupies the bulk of the thesis, which the curious reader should consult for (exciting, believe me) details.

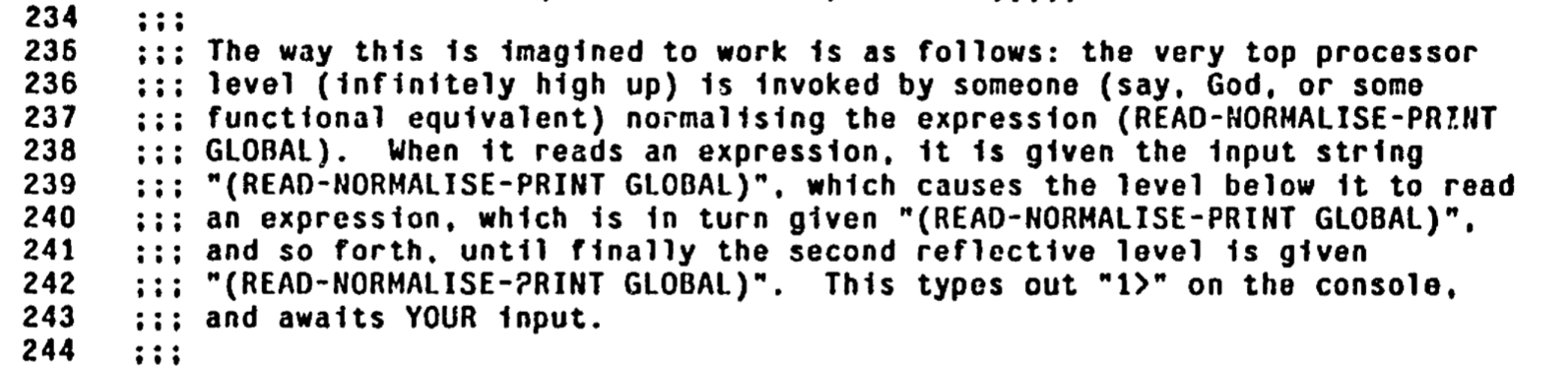

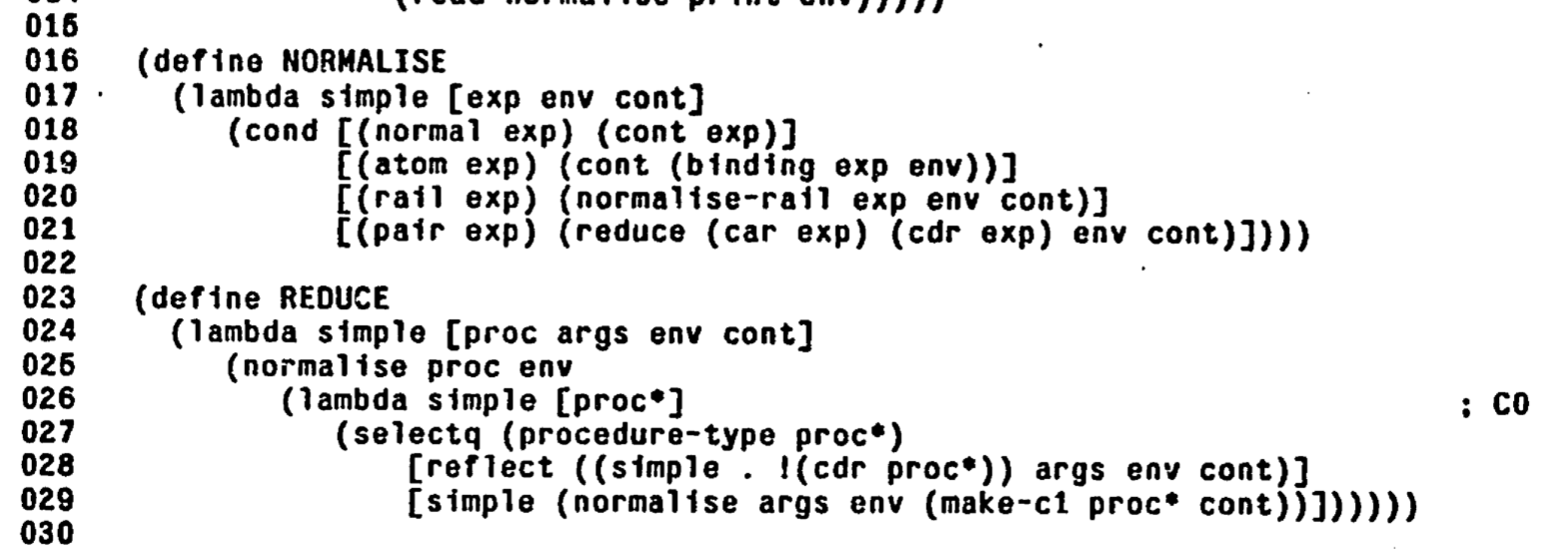

Once 2-LISP is constructed, adding the reflective capability to it is relatively straightforward. The meta-level trap takes the form of a special lambda expression:

```lisp
(lambda reflect [ARGS ENV CONT] BODY)
```

When this lambda function is applied (at the object level), the body is directly executed (not interpreted) at the meta-level with ARGS bound to the meta-level representation of the actual parameters, ENV bound to the environment (basically, the list of identifiers and the values they are bound to) and CONT bound to the continuation. Environment and continuation together represent the 3-LISP interpreter state (much like registers and memory represent the machine language interpreter state); this representation goes all the way back to the SECD machine, see The Mechanical Evaluation of Expressions.
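The following Python sketch may help to see what a reflective procedure receives. It is not 3-LISP and not Smith's implementation: it is a hypothetical, minimal continuation-passing evaluator for a tiny LISP-like language (Python lists for combinations, strings for identifiers) in which a Reflective object stands in for lambda reflect and is handed the unevaluated arguments, the environment and the continuation. Because its meta-level is Python rather than the object language itself, there is a single trap and no tower. The names Reflective, evaluate, mention and abort are assumptions of the sketch.

```python
import operator


class Reflective:
    """Stand-in for (lambda reflect [ARGS ENV CONT] BODY): body is a Python
    function run at the meta-level with the unevaluated argument
    expressions, the caller's environment and its continuation."""

    def __init__(self, body):
        self.body = body


def evaluate(expr, env, cont):
    """Evaluate expr in env and pass the result to cont (CPS)."""
    if isinstance(expr, str):                    # identifier
        return cont(env[expr])
    if not isinstance(expr, list):               # self-evaluating atom
        return cont(expr)
    op, *args = expr
    if op == "quote":
        return cont(args[0])
    if op == "if":
        test, then, alt = args
        return evaluate(test, env,
                        lambda t: evaluate(then if t else alt, env, cont))
    if op == "lambda":                           # "simple" lambda
        params, body = args
        return cont(("closure", params, body, env))

    def with_procedure(proc):
        if isinstance(proc, Reflective):
            # Trap to the meta-level: ARGS, ENV and CONT are handed over
            # as data; the body decides whether and how to continue.
            return proc.body(args, env, cont)
        return evaluate_list(args, env,
                             lambda vals: apply_procedure(proc, vals, cont))

    return evaluate(op, env, with_procedure)


def evaluate_list(exprs, env, cont):
    if not exprs:
        return cont([])
    return evaluate(exprs[0], env,
                    lambda v: evaluate_list(exprs[1:], env,
                                            lambda vs: cont([v] + vs)))


def apply_procedure(proc, vals, cont):
    if callable(proc):                           # primitive
        return cont(proc(*vals))
    _, params, body, closure_env = proc
    return evaluate(body, {**closure_env, **dict(zip(params, vals))}, cont)


GLOBAL_ENV = {
    "+": operator.add,
    "<": operator.lt,
    # Returns the argument *expression*: the object-level form seen as
    # meta-level data (mention rather than use).
    "mention": Reflective(lambda args, env, cont: cont(args[0])),
    # Evaluates its argument and drops CONT, aborting the whole computation.
    "abort": Reflective(lambda args, env, cont:
                        evaluate(args[0], env, lambda v: v)),
}


def run(expr):
    return evaluate(expr, GLOBAL_ENV, lambda v: v)
```

For example, run(["mention", ["+", 1, 2]]) returns the list ["+", 1, 2] rather than 3, and run(["+", 1, ["abort", 100]]) returns 100, because the reflective body never invokes the continuation that would have performed the addition.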

Here is the fragment of the 3-LISP meta-circular interpreter code that handles lambda reflect (together with "ordinary" lambdas, denoted by lambda simple):

Implementation

It is of course not possible to run an infinite tower of interpreters directly.

The 3-LISP implementation creates a meta-level on demand, when a reflective lambda is invoked. At that moment the state of the meta-level interpreter is synthesised (e.g., see make-c1 in the listing above). The implementation takes pains to detect when it can drop down to a lower level, which is not entirely simple, because a reflective lambda can, instead of returning (that is, invoking the supplied continuation), run a potentially modified version of the read-eval-print loop (called READ-NORMALISE-PRINT in 3-LISP), which does not return. There is a lot of non-trivial machinery operating behind the scenes, and though the implementation modestly proclaims itself extremely inefficient, it is, in fact, remarkably fast.

Porting

I was unable to find a digital copy of the 3-LISP sources and so manually retyped them from the appendix of the thesis. The transcription in 3-lisp.lisp (2003 lines, 200K characters) preserves the original pagination and character set; see the comments at the top of the file. Transcription was mostly straightforward, except for a few places where the PDF is illegible (for example, see here), all of which, fortunately, are within comment blocks.

The sources are in the CADR machine dialect of LISP, which, save for some minimal and no longer relevant details, is equivalent to Maclisp.

The 3-LISP implementation does not have its own parser or interpreter. Instead, it uses the flexibility built into the LISP reader (see readtables) to parse, interpret and even compile 3-LISP with a very small amount of additional code. Amazingly, this code, more than 40 years old and relying on arcane features like readtable customisation, runs on a modern Common Lisp platform after a very small set of changes: some functions got renamed (CASEQ to CASE, *CATCH to CATCH, etc.), some functions are missing (MEMQ, FIXP), some signatures changed (TYPEP, BREAK, IF). See 3-lisp.cl for details.

Unfortunately, the port does not run on all modern Common Lisp implementations, because it relies on proper support for backquote across recursive reader invocations:

```lisp
;; Maclisp maintains backquote context across recursive parser
;; invocations. For example in the expression (which happens within defun
;; 3-EXPAND-PAIR)
;;
;; `\(PCONS ~,a ~,d)
;;
;; the backquote is consumed by the top-level activation of READ. Backslash
;; forces the switch to 3-lisp readtable and call to 3-READ to handle the
;; rest of the expression. Within this 3-READ activation, the tilde forces
;; switch back to L=READTABLE and a call to READ to handle ",a". In Maclisp,
;; this second READ activation re-uses the backquote context established by
;; the top-level READ activation. Of all Common Lisp implementations that I
;; tried, only sbcl correctly handles this situation. Lisp Works and clisp
;; complain about "comma outside of backquote". In clisp,
;; clisp-2.49/src/io.d:read_top() explicitly binds BACKQUOTE-LEVEL to nil.
```

Among the Common Lisp implementations I tried, only sbcl supports it properly. After reading the Common Lisp HyperSpec, I believe that it is Maclisp and sbcl that implement the specification correctly, and the other implementations are faulty.

Conclusion

Procedural Reflection in Programming Languages is, in spite of its age, a very interesting read. Not only does it contain an implementation of a refreshingly new and bold idea (it is not even immediately obvious that infinite reflective towers can be implemented at all, let alone with any reasonable degree of efficiency), it is also based on an interplay between mathematics and programming: the model of computation is proposed and afterwards implemented in 3-LISP. Because the model is implemented in an actual running program, it has to be specified with extreme precision (which would make Tarski and Łukasiewicz tremble), and any execution of the 3-LISP interpreter validates the model.

"adaptive replacement better than Working Set?" has an (implicit) idea: VM page replacement should keep a spectrum of accesses to a given page. Too high and too low frequencies should be filtered out, because they represent correlated references and are irrelevant, respectively.

Groups usually come with homomorphisms, defined as mappings preserving multiplication:

$$f(a\cdot b) = f(a)\cdot f(b)$$

From this definition, the notions of subgroup (monomorphism), quotient group (epimorphism, normal subgroup) and the famous isomorphism theorem follow naturally. The category of groups with homomorphisms as arrows has products and sums, equalizers and coequalizers, all well-known and with nice properties.

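Consider, instead, affine morphisms, which can be defined by any of the following equivalent conditions (here and below \(f^{-1}(x)\) denotes the group inverse \(f(x)^{-1}\), not the inverse function):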

1. \(f(a \cdot b^{-1} \cdot c) = f(a) \cdot f^{-1}(b) \cdot f(c)\)

2. \(f(a \cdot b) = f(a) \cdot f^{-1}(e) \cdot f(b)\)

3. \(\exists t. f(a \cdot b) = f(a) \cdot t \cdot f(b)\)

The motivation for this definition is slightly roundabout.

The difference between homomorphism and affine morphism is similar to the difference between a vector subspace and an affine subspace of a vector space. A vector subspace always goes through the origin (for a homomorphism \(f\), \(f(e) = e\)), whereas an affine subspace is translated from the origin (\(f(e) \neq e\) is possible for an affine morphism).

Take points \(f(a)\) and \(f(b)\) in the image of an affine morphism, translate them back to the corresponding "vector subspace" to obtain \(f(a) \cdot f^{-1}(e)\) and \(f(b) \cdot f^{-1}(e)\). If translated points are multiplied and the result is translated back to the affine image, the resulting point should be the same as \(f(a \cdot b)\):

$$f(a \cdot b) = (f(a) \cdot f^{-1}(e)) \cdot (f(b) \cdot f^{-1}(e)) \cdot f(e) = f(a) \cdot f^{-1}(e) \cdot f(b)$$

which gives the definition (2).

(1) => (2) immediately follows by substituting \(e\) for \(b\).

(2) => (3) by substituting \(f^{-1}(e)\) for \(t\).

(3) => (2) by substituting \(e\) for \(a\) and \(b\).

(2) => (1)

$$\begin{array}{r@{\;}c@{\;}l@{\quad}l@{\quad}} f(a \cdot b^{-1} \cdot c) &\;=\;& & \\ &\;=\;& f(a) \cdot f^{-1}(e) \cdot f(b^{-1} \cdot c) & \text{\{(2) for \(a \cdot (b^{-1} \cdot c)\)\}} \\ &\;=\;& f(a) \cdot f^{-1}(e) \cdot f(b^{-1}) \cdot f^{-1}(e) \cdot f(c) & \text{\{(2) for \(b^{-1} \cdot c\)\}} \\ &\;=\;& f(a) \cdot f^{-1}(e) \cdot f(b^{-1}) \cdot f^{-1}(e) \cdot f(b) \cdot f^{-1}(b) \cdot f(c) & \text{\{\(e = f(b) \cdot f^{-1}(b)\), working toward } \\ & & & \text{creating a sub-expression that can be collapsed by (2)\}} \\ &\;=\;& f(a) \cdot f^{-1}(e) \cdot f(b^{-1} \cdot b) \cdot f^{-1}(b) \cdot f(c) & \text{\{collapsing \(f(b^{-1}) \cdot f^{-1}(e) \cdot f(b)\) by (2)\}} \\ &\;=\;& f(a) \cdot f^{-1}(e) \cdot f(e) \cdot f^{-1}(b) \cdot f(c) & \text{\{\(b^{-1} \cdot b = e\)\}} \\ &\;=\;& f(a) \cdot f^{-1}(b) \cdot f(c) & \text{\{\(f^{-1}(e) \cdot f(e) = e\)\}} \end{array}$$

It is easy to check that each homomorphism is an affine morphism (specifically, homomorphisms are exactly affine morphisms with \(f(e) = e\)).

Composition of affine morphisms is affine and hence groups with affine morphisms form a category \(\mathbb{Aff}\).
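A brute-force check on a small non-abelian group may make these definitions concrete. The following Python sketch (all names are ours, for illustration only) represents the symmetric group \(S_3\) as permutation tuples, builds a map \(f\) as a homomorphism (conjugation) followed by a left translation, and checks conditions (1) and (2), computing \(f^{-1}(x)\) as the group inverse of \(f(x)\).

```python
from itertools import permutations, product

# The symmetric group S3: p[i] is the image of i under permutation p.
G = list(permutations(range(3)))
E = (0, 1, 2)                                   # identity


def mul(p, q):
    """Product p·q: apply q first, then p."""
    return tuple(p[q[i]] for i in range(len(q)))


def inv(p):
    r = [0] * len(p)
    for i, pi in enumerate(p):
        r[pi] = i
    return tuple(r)


def is_homomorphism(f):
    return all(f[mul(a, b)] == mul(f[a], f[b]) for a, b in product(G, G))


def is_affine_2(f):
    """Condition (2): f(a·b) = f(a)·f(e)^{-1}·f(b)."""
    t = inv(f[E])
    return all(f[mul(a, b)] == mul(mul(f[a], t), f[b])
               for a, b in product(G, G))


def is_affine_1(f):
    """Condition (1): f(a·b^{-1}·c) = f(a)·f(b)^{-1}·f(c)."""
    return all(f[mul(mul(a, inv(b)), c)] == mul(mul(f[a], inv(f[b])), f[c])
               for a, b, c in product(G, G, G))


s, g = (1, 0, 2), (1, 2, 0)
conj = {x: mul(mul(s, x), inv(s)) for x in G}  # a homomorphism
f = {x: mul(g, conj[x]) for x in G}            # ...then translated by g
f_f = {x: f[f[x]] for x in G}                  # the composition f∘f
inversion = {x: inv(x) for x in G}             # not affine in S3
```

Here \(f\) passes both affine checks but is not a homomorphism, since \(f(e) = g \neq e\); the composition \(f \circ f\) is again affine; and inversion fails, because with \(f(e) = e\) condition (2) reduces to being a homomorphism, which inversion is only in abelian groups.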

A non-empty subset \(H\) of a group \(G\) is called an affine subgroup of \(G\) if one of the following equivalent conditions holds:

1. \(\exists h \in G:\forall p, q \in H \rightarrow (p \cdot h^{-1} \cdot q \in H \wedge h \cdot p^{-1} \cdot h \in H)\)

2. \(\forall p, q, h \in H \rightarrow (p \cdot h^{-1} \cdot q \in H \wedge h \cdot p^{-1} \cdot h \in H)\)

The equivalence (with a proof left as an exercise) means that if any \(h\) "translating" the affine subgroup to a subgroup exists, then any member of the affine subgroup can be used for translation. In fact, any \(h\) that satisfies (1) belongs to \(H\) (prove). This matches the situation with affine and vector subspaces: any vector from an affine subspace translates this subspace to the subspace passing through the origin.

Finally for today, consider an affine morphism \(f:G_0\rightarrow G_1\). For \(t\in G_0\) define the kernel:

$$\ker_t f = \{g\in G_0 \mid f(g) = f(t)\}$$

It's easy to check that a kernel is an affine subgroup (take \(t\) as \(h\)). Note that in \(\mathbb{Aff}\) a whole family of subobjects corresponds to a morphism, whereas in \(\mathbb{Grp}\) there is a single kernel.
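A small brute-force example, again a hypothetical sketch with names of our own: in the cyclic group \(\mathbb{Z}_{12}\), written additively (so \(p \cdot h^{-1} \cdot q\) becomes \(p - h + q\)), the map \(f(x) = 4x + 3\) is an affine morphism with \(f(0) \neq 0\). Its kernels \(\ker_t f\) are the three cosets of \(\{0, 3, 6, 9\}\), each an affine subgroup, and for each of them the elements \(h\) satisfying condition (1) are exactly its own members.

```python
N = 12  # Z_12 written additively: p·h^{-1}·q becomes p - h + q


def satisfies_condition_1(H, h):
    """Condition (1) for a fixed h: p - h + q and h - p + h stay in H."""
    return all((p - h + q) % N in H and (2 * h - p) % N in H
               for p in H for q in H)


def is_affine_subgroup(H):
    """Condition (2): condition (1) holds with every h taken from H."""
    return all(satisfies_condition_1(H, h) for h in H)


def translators(H):
    """All h in Z_12 that satisfy condition (1) for H."""
    return {h for h in range(N) if satisfies_condition_1(H, h)}


def f(x):
    """A hypothetical affine morphism Z_12 -> Z_12 with f(0) = 3."""
    return (4 * x + 3) % N


def kernel(t):
    """ker_t f = {g | f(g) = f(t)}."""
    return {g for g in range(N) if f(g) == f(t)}
```

For instance, kernel(1) is {1, 4, 7, 10}: an affine subgroup that does not contain 0, hence not a subgroup.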

To be continued: affine quotients, products, sums, free affine groups.

Already in 1956 it was clear that one had to work with symbolic expressions to reach the goal of artificial intelligence. As the researchers already understood numerical computation would not have much importance.

-- Early LISP History (1956 - 1959)

Here is a little problem. Given an array \(A\) of \(N\) integers, find a sub-array (determined by a pair of indices within \(A\)) such that the sum of elements in that sub-array is maximal among all sub-arrays of \(A\).

Sounds pretty easy? It is, but just to set a little standard for solutions: there is a way (shown to me by a 15-year-old boy) to do this in one pass over \(A\) without using any additional storage except for a few integer variables.

So much for dynamic programming...

update

That exercise is interesting because, while superficially similar to well-known tasks like finding the longest common substring, it can be solved in one pass.

Indeed Matti presented exactly the same solution that I had in mind. And it is possible (and instructive) to get a rigorous proof that this algorithm solves the problem.
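Matti's code itself is not reproduced here, so the following Python function is only a hypothetical reconstruction of a one-pass solution of this kind (the well-known Kadane-style scan), using the names new_start and i that the proof below refers to. It keeps a handful of integer variables and restarts the candidate sub-array whenever the running sum stops being positive.

```python
def max_subarray(a):
    """Return (best_sum, start, end) for a non-empty list a, where
    a[start..end] (inclusive) has the maximal sum. One pass, O(1) space."""
    best_sum, best_start, best_end = a[0], 0, 0
    current = 0        # sum of a[new_start..i]
    new_start = 0      # where the current candidate sub-array begins
    for i, x in enumerate(a):
        if current <= 0:
            # A non-positive running sum can only hurt: restart at i.
            current, new_start = x, i
        else:
            current += x
        if current > best_sum:
            best_sum, best_start, best_end = current, new_start, i
    return best_sum, best_start, best_end
```

For example, max_subarray([-2, 1, -3, 4, -1, 2, 1, -5, 4]) returns (6, 3, 6): the sub-array 4, -1, 2, 1.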

Definition 0. Let \(A = (i, j)\) be a sub-array \((i \le j)\); then \(S(A) = S(i, j)\) denotes the sum of elements in \(A\). \(S(A)\) will be called the sum of \(A\).

Definition 1. Let \(A = (i, j)\) be a sub-array \((i \le j)\). Then for any \(p\), \(q\) and \(r\) such that

$$0 \le p < i, \quad i \le q < j, \quad j < r < N$$

sub-array \((p, i - 1)\) is called a left outfix of \(A\), \((i, q)\) a left infix of \(A\), \((q + 1, j)\) a right infix of \(A\), and \((j + 1, r)\) a right outfix of \(A\).

Definition 2. A sub-array is called optimal if all its infixes have positive sums, and all its outfixes have negative sums.

It's easy to prove the following:

Statement 0. A sub-array with the maximal sum is optimal.

Indeed, by definition, any non-optimal sub-array has either a negative infix or a positive outfix and hence can be shrunk or expanded to another sub-array with a larger sum.

As an obvious corollary we get:

Statement 1. To find a sub-array with the maximal sum it's enough to find a sub-array with the maximal sum among all optimal sub-arrays.

The key fact that explains why our problem can be solved in one pass is captured in the following:

Statement 2. Optimal sub-arrays do not overlap.

This is also easily proved by observing that when two sub-arrays \(A\) and \(B\) overlap, there always is a sub-array \(C\) that is an infix of \(A\) and an outfix of \(B\).

Now the only thing left to do is to prove that Matti's algorithm calculates the sums of (at least) all optimal sub-arrays, which is easy:

1. First, prove that, possibly except for the initial value, new_start always points to an index at which at least one (and, therefore, exactly one) optimal sub-array starts. This is easily proved by induction.

2. Then prove that once a particular value of new_start has been reached, new_start is not modified until i reaches the upper index of the optimal sub-array starting at new_start. This follows directly from the definition of an optimal sub-array, because all its left infixes have positive sums.

3. This means that for any optimal sub-array \((p, q)\) there is an iteration of the loop at which

$$\mathit{new\_start} = p \quad\text{and}\quad i = q$$

As the algorithm finds the maximal sum among all \((\mathit{new\_start}, i)\) sub-arrays, it finds the maximal sum among all optimal sub-arrays and, by Statement 1, the maximal sum among all sub-arrays.

\( \def\List{\operatorname{List}} \) \( \def\integer{\operatorname{integer}} \) \( \def\nat{\operatorname{nat}} \) \( \def\Bool{\operatorname{Bool}} \) \( \def\Punct{\operatorname{Punct}} \) \( \def\BT{\operatorname{BT}} \) \( \def\B{\operatorname{B}} \) \( \def\T{\operatorname{T}} \) \( \def\R{\operatorname{R}} \) \( \def\Set{\operatorname{Set}} \) \( \def\Bag{\operatorname{Bag}} \) \( \def\Ring{\operatorname{Ring}} \) \( \def\plug{\operatorname{plug}} \) \( \def\Zipper{\operatorname{Zipper}} \) \( \def\cosh{\operatorname{cosh}} \) \( \def\sinh{\operatorname{sinh}} \) \( \def\ch{\operatorname{ch}} \) \( \def\sh{\operatorname{sh}} \) \( \def\d{\partial} \)

There is that curious idea that you can think of a type in a programming language as a kind of algebraic object. Take (homogeneous) lists, for example. A list of integers is either an empty list (i.e., nil) or a pair of an integer (the head of the list) and another list (the tail of the list). You can symbolically write this as

$$\List(\integer) = 1 + \integer\cdot\List(\integer)$$

Here \(1\) is the unit type with one element, it does not matter what this element is exactly. \(A + B\) is a disjoint sum of \(A\) and \(B\). It is a tagged union type, whose values are values of \(A\) or \(B\) marked as such. \(A \cdot B\) is the product type. Its values are pairs of values of \(A\) and \(B\).

The underlying mathematical machinery includes "polynomial functors", "monads", "Lambek's theorem", etc. You can stop digging when you reach "Knaster-Tarski theorem" and "Beck's tripleability condition".

In general, we have

$$\List(x) = 1 + x \cdot \List(x)$$

In Haskell this is written as

```haskell
data List x = Nil | Cons x (List x)
```

Similarly, a binary tree with values of type \(x\) at the nodes can be written as

$$\BT(x) = 1 + x\cdot \BT(x) \cdot \BT(x)$$

That is, a binary tree is either empty or a triple of the root, the left sub-tree and the right sub-tree. If we are only interested in the "shapes" of the binary trees we need

$$\B = \BT(1) = 1 + \B^2$$

Nothing too controversial so far. Now, apply some trivial algebra:

$$\begin{array}{r@{\;}c@{\;}l@{\quad}} \List(x) &\;=\;& 1 + x \cdot \List(x) \\ \List(x) - x \cdot \List(x) &\;=\;& 1 \\ \List(x) \cdot (1 - x) &\;=\;& 1 \\ \List(x) &\;=\;& \frac{1}{1 - x} \\ \List(x) &\;=\;& 1 + x + x^2 + x^3 + \cdots \end{array}$$

Going from \(\List(x) - x \cdot \List(x)\) to \(\List(x) \cdot (1 - x)\) in the middle step arguably makes some sense: the product of sets and types distributes over sums, much like it does for integers. But the rest is difficult to justify or even interpret. What is the difference of types, or an infinite series of them?

The last equation, however, is perfectly valid: an element of \(\List(x)\) is either nil, or a singleton element of \(x\), or a pair of elements of \(x\), or a triple, etc. This is somewhat similar to the cavalier methods of eighteenth-century mathematicians like Euler and the Bernoulli brothers, who would boldly go where no series converged before and, through a sequence of meaningless intermediate steps, arrive at a correct result.

One can apply all kinds of usual algebraic transformations to \(\List(x) = (1 - x)^{-1}\). For example,

$$\begin{array}{r@{\;}c@{\;}l@{\quad}l@{\quad}} \List(a + b) &\;=\;& \frac{1}{1 - a - b} \\ &\;=\;& \frac{1}{1 - a}\cdot\frac{1}{1 - \frac{b}{1 - a}} \\ &\;=\;& \List(a)\cdot\List(\frac{b}{1 - a}) \\ &\;=\;& \List(a)\cdot\List(b\cdot\List(a)) \end{array}$$

This corresponds to the regular-expression identity \((a|b)^* = a^*(ba^*)^*\).
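The regular-expression identity can be spot-checked mechanically. The sketch below uses Python's standard re module; the helper name same_language and the length bound are our own choices. It compares which words over {a, b} the two patterns match, for all words up to a given length. This only tests equality of the languages; the type identity says more, namely that every word decomposes in exactly one way according to \(a^*(ba^*)^*\).

```python
import re
from itertools import product


def same_language(pattern1, pattern2, alphabet="ab", max_len=8):
    """True if both regexes fully match exactly the same words over the
    alphabet, for every word of length at most max_len."""
    for n in range(max_len + 1):
        for letters in product(alphabet, repeat=n):
            word = "".join(letters)
            matches1 = re.fullmatch(pattern1, word) is not None
            matches2 = re.fullmatch(pattern2, word) is not None
            if matches1 != matches2:
                return False
    return True
```

For contrast, same_language("(a|b)*", "a*b*") is False, since "ba" matches only the first pattern.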

Apply the same trick to binary trees:

$$\begin{array}{r@{\;}c@{\;}l@{\quad}l@{\quad}} \BT &\;=\;& 1 + \BT^2 \cdot x & \\ \BT^2 \cdot x - \BT + 1 &\;=\;& 0 & \text{(Solve the quadratic equation.)} \\ \BT &\;=\;& \frac{1\pm\sqrt{1-4\cdot x}}{2\cdot x} & \text{(Use binomial theorem)} \\ \sqrt{1-4\cdot x} &\;=\;& \sum_{k=0}^\infty \binom{\tfrac12}{k}(-4\cdot x)^k & \\ \BT(x) &\;=\;& 1 + x + 2\cdot x^2 + 5\cdot x^3 + 14\cdot x^4 + 42\cdot x^5 + \cdots & \\ &\;=\;& \sum_{n=0}^\infty C_n\cdot x^n, & \end{array}$$

Here \(C_n=\frac{1}{n+1}\binom{2\cdot n}{n}\) is the \(n\)-th Catalan number, that is, the number of binary trees with \(n\) nodes. To understand the last series we need to decide what \(n\cdot x\) is, where \(n\) is a non-negative integer and \(x\) is a type. Two natural interpretations are

$$n \cdot x = x + x + \cdots + x = \sum_{i=0}^{n - 1} x$$

and

$$n \cdot x = \{0, \ldots, n - 1\} \cdot x$$

both of which mean the same thing: an element of \(n\cdot x\) is an element of \(x\) marked with one of \(n\) "branches", and this is the same as a pair \((i, t)\) where \(0 \le i < n\) and \(t\) is an element of \(x\).

The final series then shows that a binary tree is either an empty tree (\(1\)) or an \(n\)-tuple of \(x\)-es associated with one of \(C_n\) shapes of binary trees with \(n\) nodes. It worked again!
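The coefficients can also be obtained without solving anything, by iterating the defining equations \(\List = 1 + x\cdot\List\) and \(\BT = 1 + x\cdot\BT^2\) on truncated power series, represented here as Python lists of coefficients. This is a hypothetical sketch (the helper names are ours); each iteration fixes at least one more coefficient, because the right-hand side multiplies by \(x\).

```python
from math import comb

N = 10  # number of coefficients kept


def add(p, q):
    return [u + v for u, v in zip(p, q)]


def mul(p, q):
    """Product of two power series truncated to N coefficients."""
    r = [0] * N
    for i, pi in enumerate(p):
        for j in range(N - i):
            r[i + j] += pi * q[j]
    return r


def times_x(p):
    """Multiply a truncated series by x."""
    return [0] + p[:-1]


def fixed_point(step):
    """Iterate T <- step(T) from T = 0 until the truncated series is stable."""
    t = [0] * N
    while (nxt := step(t)) != t:
        t = nxt
    return t


ONE = [1] + [0] * (N - 1)
lists = fixed_point(lambda t: add(ONE, times_x(t)))          # List(x)
trees = fixed_point(lambda t: add(ONE, times_x(mul(t, t))))  # BT(x)
catalan = [comb(2 * n, n) // (n + 1) for n in range(N)]
```

The list series comes out as all ones, matching \(1 + x + x^2 + \cdots\), and the tree series starts 1, 1, 2, 5, 14, 42, matching \(C_n\).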

Now, watch carefully. Take simple unmarked binary trees: \(\B = \BT(1) = 1 + \B^2\). In this case, we can "calculate" the solution exactly:

$$\B^2 - \B + 1 = 0$$

hence

$$\B = \frac{1 \pm \sqrt{1 - 4}}{2} = \frac{1}{2} \pm i\cdot\frac{\sqrt{3}}{2} = e^{\pm i\cdot\frac{\pi}{3}}$$

Both solutions are primitive sixth roots of \(1\): \(\B^6 = 1\). OK, this is still completely meaningless, right? Yes, but it also means that \(\B^7 = \B\), which, if correct, would imply that there is a bijection between the set of all binary trees and the set of all 7-tuples of binary trees. Haha, clearly impossible... Actually, it's a more or less well-known fact.

A note is in order. All "sets" and "types" that we talked about so far are at most countably infinite, so, of course, there exists a bijection between \(\B\) and \(\B^7\) simply because both of these sets are countable. What is interesting, and what the paper linked to above explicitly establishes, is that there is, as they call it, "a very explicit" bijection between these sets, that is, a "structural" bijection that does not look arbitrarily deep into its argument trees. For example, in a functional programming language, such a bijection can be coded through pattern matching without recursion.

Here is another neat example. A rose tree is a kind of tree datatype where a node has an arbitrary list of children:

$$\R(x) = x\cdot\List(\R(x))$$

Substituting \(\List(x) = (1 - x)^{-1}\) we get

$$\R(x) = \frac{x}{1 - \R(x)}$$

or

$$\R^2(x) - \R(x) + x = 0$$

Hence \(\R = \R(1)\) is defined by the same equation as \(\B = \BT(1)\): \(\R^2 - \R + 1 = 0\). So, there must be a bijection between \(\R\) and \(\B\), and of course there is: one of the first things LISP hackers realised in the late 1950s is that an arbitrary tree can be encoded with cons cells.

Mathematically, this means that instead of "initial algebras" we treat types as "final co-algebras".

All this is, by the way, equally applicable to infinite datatypes (that is, streams, branching transition systems, etc.).
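The cons-cell encoding of rose trees mentioned above can be sketched in a few lines of Python. This is the standard first-child/next-sibling encoding, shown for shapes only (\(x = 1\)); the representation (a rose tree as the tuple of its children, a binary tree as None or a (car, cdr) pair) and the function names are assumptions of the sketch. The CAR holds the encoded children of the first tree of a forest, the CDR the encoded remaining siblings, so a rose tree with \(n\) nodes becomes a binary tree with \(n - 1\) nodes.

```python
def forest_to_binary(forest):
    """List(R) -> B: CAR holds the encoded children of the first tree,
    CDR holds the encoded rest of the forest."""
    if not forest:
        return None
    first, *rest = forest
    return (forest_to_binary(first), forest_to_binary(tuple(rest)))


def binary_to_forest(b):
    """B -> List(R), the inverse of forest_to_binary."""
    if b is None:
        return ()
    car, cdr = b
    return (binary_to_forest(car),) + binary_to_forest(cdr)


def rose_to_binary(rose):
    # A rose-tree shape is determined by the forest of its children.
    return forest_to_binary(rose)


def binary_to_rose(b):
    return binary_to_forest(b)


def binary_trees(n):
    """All binary-tree shapes with n nodes."""
    if n == 0:
        return [None]
    return [(left, right) for k in range(n)
            for left in binary_trees(k) for right in binary_trees(n - 1 - k)]


def size(rose):
    """Number of nodes in a rose tree."""
    return 1 + sum(size(child) for child in rose)
```

For example, the 5-node rose tree ((), ((),), ()) (a root with three children, the middle one having a child of its own) encodes to the 4-node binary tree (None, ((None, None), (None, None))), and decoding gives it back.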

That was pure algebra; there is nothing "analytical" here. Analysis historically started with the notion of derivative. And sure enough, you can define and calculate derivatives of data type constructors like \(\List\) and \(\BT\). These derivatives are generalisations of zipper-like structures: they represent "holes" in the data type that can be filled by an instance of the type parameter.

For example, suppose you have a list \((x_0, \ldots, x_k, \ldots, x_{n - 1})\) of type \(\List(x)\). A hole is obtained by cutting out the element \(x_k\), giving \((x_0, \ldots, x_{k-1}, \blacksquare, x_{k+1}, \ldots, x_{n - 1})\), and is fully determined by the part of the list to the left of the hole \((x_0, \ldots, x_{k - 1})\) and the part to the right \((x_{k+1}, \ldots, x_{n - 1})\). Both of these can be arbitrary lists (including empty ones), so a hole is determined by a pair of lists, and we would expect \(\d \List(x) = \List^2(x)\). But Euler would have told us this immediately, because \(\List(x) = (1 - x)^{-1}\), hence

$$\d \List(x) = \d (1 - x)^{-1} = (1 - x)^{-2} = \List^2(x)$$

In general, the derivative \(\d T(x)\) of a type constructor (of a "function", analytically speaking) \(T(x)\) comes with a "very explicit" surjective function

$$\plug : \d T(x) \cdot x \to T(x)$$

which is injective in each of its arguments, and the derivative has the usual properties, among others:

$$\begin{array}{r@{\;}c@{\;}l@{\quad}} \d (A + B) &\;=\;& \d A + \d B \\ \d (A \cdot B) &\;=\;& \d A \cdot B + A \cdot \d B \\ \d_x A(B(x)) &\;=\;& \d_B A(B) \cdot \d_x B(x) \\ \d x &\;=\;& 1 \\ \d x^n &\;=\;& n\cdot x^{n-1} \\ \d n &\;=\;& 0 \end{array}$$

For binary trees we get

$$\begin{array}{r@{\;}c@{\;}l@{\quad}} \d \BT(x) &\;=\;& \d (1 + x\cdot \BT^2(x)) \\ &\;=\;& 0 + \BT^2(x) + 2\cdot x\cdot \BT(x) \cdot \d \BT(x) \\ &\;=\;& \BT^2(x) / (1 - 2\cdot x\cdot \BT(x)) \\ &\;=\;& \BT^2(x) \cdot \List(2\cdot x\cdot \BT(x)) \\ &\;=\;& \BT^2(x) \cdot \Zipper_2(x) \end{array}$$

Here \(\Zipper_2(x) = \List(2\cdot x\cdot \BT(x))\) is Huet's classical zipper for binary trees. We have an additional \(\BT^2(x)\) multiplier because Huet's zipper plugs in an entire sub-tree, that is, it is a \(\BT(x)\)-sized hole, whereas our derivative is an \(x\)-sized hole.
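The formula \(\d \BT(x) = \BT^2(x) \cdot \List(2\cdot x\cdot \BT(x))\) can be read directly as a data structure. In the following hypothetical Python sketch (the representation and the names holes, plug and leaf are ours), a hole consists of the two sub-trees of the removed node (the \(\BT^2\) factor) and the path to the root, a list of steps, each a direction (the factor \(2\)), the parent's value (\(x\)) and the sibling sub-tree (\(\BT\)). Plugging a value rebuilds the tree bottom-up.

```python
# A binary tree is None (empty) or a triple (left, value, right).
# A hole is (left, right, path): the two sub-trees of the removed node
# and the path to the root, innermost step first; each step is
# (direction, parent_value, sibling).


def holes(tree, path=()):
    """Yield (hole, removed_value) for every node of tree."""
    if tree is None:
        return
    left, value, right = tree
    yield (left, right, list(path)), value
    yield from holes(left, (("L", value, right),) + path)
    yield from holes(right, (("R", value, left),) + path)


def plug(hole, x):
    """Put x into the hole and rebuild the tree up to the root."""
    left, right, path = hole
    tree = (left, x, right)
    for direction, parent_value, sibling in path:
        if direction == "L":
            tree = (tree, parent_value, sibling)
        else:
            tree = (sibling, parent_value, tree)
    return tree


def leaf(v):
    return (None, v, None)
```

Every node yields exactly one hole, and plugging the removed value back into any hole restores the original tree.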

For \(n\)-ary trees \(\T(x) = 1 + x\cdot \T^n(x)\) we similarly have

$$\begin{array}{r@{\;}c@{\;}l@{\quad}} \d \T(x) &\;=\;& \d (1 + x\cdot \T^n(x)) \\ &\;=\;& 0 + \T^n(x) + n\cdot x\cdot \T^{n-1}(x) \cdot \d \T(x) \\ &\;=\;& \T^n(x) / (1 - n\cdot x\cdot \T^{n -1}(x)) \\ &\;=\;& \T^n(x) \cdot \List(n\cdot x\cdot \T^{n - 1}(x)) \\ &\;=\;& \T^n(x) \cdot \Zipper_n(x) \end{array}$$

At this point we have basic type constructors for arrays (\(x^n\)), lists and variously shaped trees, which we can combine and differentiate. We can also try constructors with multiple type parameters (e.g., trees with different types at the leaves and internal nodes) and verify that the usual partial-differentiation rules apply. But your inner Euler must be jumping up and down: much more is possible!

Indeed it is. Recall the "graded" representation of \(\List(x)\):

$$\List(x) = 1 + x + x^2 + x^3 + \cdots$$

This formula says that there is one (i.e., \(|x|^0\)) list of length \(0\), one list (of length \(1\)) for each element of \(x\), one list for each ordered pair of elements of \(x\), for each ordered triple, etc. What if we forget the order of \(n\)-tuples? Then, by identifying the \(n!\) permutations of each tuple, we would get, instead of lists with \(n\) elements, multisets (bags) with \(n\) elements.

$$\Bag(x) = \frac{1}{0!} + \frac{x}{1!} + \frac{x^2}{2!} + \cdots + \frac{x^k}{k!} + \cdots = e^x$$[0]

Formally we move from polynomial functors to "analytic functors" introduced by A. Joyal.

This counting argument, taken literally, does not hold water. For one thing, it implies that every term in the \(e^x\) series is an integer (hint: there are no \(n!\) \(n\)-tuples belonging to the same class as \((t, t, \ldots, t)\)). But it drives the intuition in the right direction, and \(\Bag(x)\) does have the same properties as the exponential. Before we demonstrate this, let's look at another example.

A list of \(n\) elements is one of \(|x|^n\) \(n\)-tuples of elements of \(x\). A bag with \(n\) elements is one of such tuples, considered up to a permutation. A set with \(n\) elements is an \(n\)-tuple of different elements considered up to a permutation. Hence, where for lists and bags we have \(x^n\), sets have \(x\cdot(x - 1)\cdot\cdots\cdot(x - n + 1)\) by the standard combinatorial argument: there are \(x\) options for the first element, \(x - 1\) options for the second element and so on.

That is,

$$\begin{array}{r@{\;}c@{\;}l@{\quad}} \Set(x) &\;=\;& 1 + \frac{x}{1!} + \frac{x\cdot(x - 1)}{2!} + \frac{x\cdot(x - 1)\cdot(x - 2)}{3!} + \cdots + \frac{x\cdot(x - 1)\cdot\cdots\cdot(x - k + 1)}{k!} + \cdots \\ &\;=\;& (1 + 1)^x \;\;\;\;\text{(by the binomial theorem)} \\ &\;=\;& 2^x \end{array}$$

OK, we have \(\Bag(x) = e^x\) and \(\Set(x) = 2^x\). The latter makes sense from the cardinality point of view: there are \(2^x\) subsets of a set \(x\) (even when \(x\) is infinite). Moreover, both \(\Set\) and \(\Bag\) satisfy the basic exponential identity \(\alpha^{x + y} = \alpha^x\cdot\alpha^y\). Indeed, a bag of integers and Booleans can be uniquely and unambiguously separated into a bag of integers and a bag of Booleans, which can be poured back together to produce the original bag, and the same holds for sets. This does not work for lists: you can separate a list of integers and Booleans into two lists, but there is no way to restore the original list from the parts. This provides a roundabout argument, if you ever need one, that the function \((1 - x)^{-1}\) does not have the form \(\alpha^x\) for any \(\alpha\).
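The separation argument can be tried out with Python's collections.Counter, which represents a finite bag as a mapping from elements to positive counts. This is a sketch under our own conventions: the two summands are ints and strs (strings rather than Booleans, since Python treats True as equal to 1), and split, merge and split_list are illustrative names.

```python
from collections import Counter


def split(bag):
    """Bag(x + y) -> Bag(x) * Bag(y): separate a bag by summand."""
    ints = Counter({k: n for k, n in bag.items() if isinstance(k, int)})
    strs = Counter({k: n for k, n in bag.items() if isinstance(k, str)})
    return ints, strs


def merge(ints, strs):
    """Bag(x) * Bag(y) -> Bag(x + y): pour the two bags back together."""
    return ints + strs


def split_list(lst):
    """The analogous map for lists, which forgets the interleaving."""
    return ([v for v in lst if isinstance(v, int)],
            [v for v in lst if isinstance(v, str)])
```

Merging the parts of a split bag gives back the original bag, whereas the different lists [1, "a"] and ["a", 1] split into the same pair, so no inverse exists for lists.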

Next, \(\alpha^x\) is the type of functions from \(x\) to \(\alpha\), \(\alpha^x = [x \to \alpha]\). For sets this means that an element of \(\Set(x)\), say \(U\), can be thought of as the characteristic function \(U : x \to \{0, 1\}\):

$$U(t) = \begin{cases} 1, & t \in U,\\ 0, & t \notin U. \end{cases}$$

For \(\Bag(x)\) we want to identify a bag \(V\) with a function \(V : x \to e\). What is \(e\)? By an easy sleight of hand we get:

$$e = e^1 = \Bag(1) = \{ 0, 1, \ldots \} = \nat$$(Because for the one-element unit type \(1\), a bag from \(\Bag(1)\) is fully determined by the (non-negative) number of times the only element of \(1\) is present in the bag.)

Hence, we have \(\Bag(x) = \nat^x = [x \to \nat]\), which looks surprisingly reasonable: a bag of \(x\)-es can be identified with the function that for each element of \(x\) gives the number of occurrences of this element in the bag. (Finite) bags are technically "free commutative monoids" generated by \(x\).
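The identification \(\Bag(x) = [x \to \nat]\) and the separation argument for \(\Bag(x + y) = \Bag(x)\cdot\Bag(y)\) translate directly into code. A minimal C++17 sketch, assuming a bag is stored as a map from elements to positive multiplicities and using `std::variant<int, bool>` as the sum type of integers and Booleans; all names are illustrative:

```cpp
#include <cassert>
#include <cstddef>
#include <map>
#include <utility>
#include <variant>

// A bag over T as the function T -> N giving each element's multiplicity;
// elements with multiplicity 0 are simply not stored.
template <typename T>
using Bag = std::map<T, std::size_t>;

template <typename T>
void bag_add(Bag<T>& bag, const T& t) { ++bag[t]; }

// V(t): the number of occurrences of t in the bag V.
template <typename T>
std::size_t multiplicity(const Bag<T>& bag, const T& t) {
    auto it = bag.find(t);
    return it == bag.end() ? 0 : it->second;
}

// Elements of the sum type int + bool.
using IntOrBool = std::variant<int, bool>;

// Bag(int + bool) -> Bag(int) x Bag(bool): separate the elements by tag.
std::pair<Bag<int>, Bag<bool>> split(const Bag<IntOrBool>& bag) {
    std::pair<Bag<int>, Bag<bool>> parts;
    for (const auto& [v, count] : bag) {
        if (std::holds_alternative<int>(v))
            parts.first[std::get<int>(v)] += count;
        else
            parts.second[std::get<bool>(v)] += count;
    }
    return parts;
}

// Bag(int) x Bag(bool) -> Bag(int + bool): pour the two bags back together.
Bag<IntOrBool> join(const Bag<int>& ints, const Bag<bool>& bools) {
    Bag<IntOrBool> bag;
    for (const auto& [i, count] : ints)
        bag[IntOrBool(std::in_place_type<int>, i)] += count;
    for (const auto& [b, count] : bools)
        bag[IntOrBool(std::in_place_type<bool>, b)] += count;
    return bag;
}
```

`split` and `join` are mutually inverse. A list analogue of `split` would lose the interleaving of integers and Booleans, which is exactly why the identity fails for lists.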

Moreover, the most famous property of \(e^x\) is that it is its own derivative, so we would expect

$$\d \Bag(x) = \Bag(x)$$And it is: if you take a bag and make a hole in it, by removing one of its elements, you get nothing more and nothing less than another bag. The "plug" function \(\plug : \Bag(x)\cdot x \to \Bag(x)\) is just multiset union:

$$\plug (V, t) = V \cup \{t\},$$which is clearly "very explicit" and possesses the required properties of injectivity and surjectivity. This works neither for lists, trees and arrays (because you have to specify where the new element is to be added) nor for sets (because if you add an element already in the set, the plug-map is not injective).

The central rôle of \(e^x\) in analysis indicates that perhaps it is the multiset, rather than the list, that is the most important data structure.

If we try to calculate the derivative of \(\Set(x)\) we would get \(\d\Set(x) = \d(2^x) = \d e^{\ln(2)\cdot x} = \ln(2)\cdot 2^x = \ln(2)\cdot\Set(x)\), of which we cannot make much sense (yet!), but we can calculate

$$\Bag(\d\Set(x)) = e^{\d\Set(x)} = e^{\ln(2)\cdot\Set(x)} = 2^{\Set(x)} = \Set(\Set(x))$$

Nice. Whatever the derivative of \(\Set(x)\) is, a bag of them is a set of sets of \(x\).

Thinking about a type constructor \(T(x) = \sum_{i>0} a_i\cdot x^i\) as a non-empty (\(i > 0\)) container, we can consider its pointed version, where a particular element in the container is marked:

$$\begin{array}{r@{\;}c@{\;}l@{\quad}} \Punct(T(x)) &\;=\;& \sum_{i>0} i\cdot a_i\cdot x^i \\ &\;=\;& x\cdot \sum_{i>0} i\cdot a_i\cdot x^{i-1} \\ &\;=\;& x\cdot \sum_{i>0} a_i\cdot \d x^i \\ &\;=\;& x\cdot \d \sum_{i>0} a_i\cdot x^i \\ &\;=\;& x\cdot \d T(x) \end{array}$$

This has a clear intuitive explanation: if you cut a hole in a container and keep the removed element aside, you, in effect, mark the removed element.

The next logical step is to try to recall as many Taylor expansions as one can to see what types they correspond to. Start with the easy ones: the hyperbolic functions

$$\cosh(x) = 1 + \frac{x^2}{2!} + \frac{x^4}{4!} + \cdots$$and

$$\sinh(x) = x + \frac{x^3}{3!} + \frac{x^5}{5!} + \cdots$$

\(\cosh(x)\) is the type of bags of \(x\) with an even number of elements and \(\sinh(x)\) is the type of bags of \(x\) with an odd number of elements. Of course, \(\cosh\) and \(\sinh\) happen to be an even and an odd function respectively. So, to answer the question in the title: \(\cosh(\List(\Bool))\) is the type of bags of an even number of lists of Booleans. It is easy to check that all the usual identities of hyperbolic trigonometry hold.

When I first studied these functions a long time ago, they used to be called \(\sh\) and \(\ch\), pronounced roughly "shine" and "coshine". The inexorable march of progress...

We can also write down a general function type \([ x \to (1 + y) ]\):

$$[ x \to (1 + y) ] = (1 + y)^x = 1 + y\cdot x + y^2\cdot\frac{x\cdot(x - 1)}{2!} + y^3\cdot\frac{x\cdot(x-1)\cdot(x-2)}{3!} + \cdots$$Combinatorial interpretation(s) (there are at least two of them!) are left to the curious reader.

We have by now seen series with terms of the form \(n_k\cdot x^k\) (\(n_k \in \nat\)) corresponding to various tree-list types, and series with terms \(n_k\cdot\frac{x^k}{k!}\) corresponding to function-exponent types. What about "logarithm"-like series with terms of the form \(n_k\cdot\frac{x^k}{k}\)? Similarly to the exponential case, we try to interpret them as types where groups of \(n\) \(n\)-tuples are identified. The obvious candidates for such groups are all possible rotations of an \(n\)-tuple. The learned name for \(n\)-tuples identified up to a rotation is "an (aperiodic) necklace"; we will call it a ring. The type of non-empty rings of elements of \(x\) is given by

$$\Ring(x) = x + \frac{x^2}{2} + \frac{x^3}{3} + \cdots$$Compare with:

$$\begin{array}{r@{\;}c@{\;}l@{\quad}} \ln(1 + x) &\;=\;& x - \frac{x^2}{2} + \frac{x^3}{3} - \frac{x^4}{4} + \cdots \\ \ln(1 - x) &\;=\;& - x - \frac{x^2}{2} - \frac{x^3}{3} - \frac{x^4}{4} - \cdots \\ - \ln(1 - x) &\;=\;& x + \frac{x^2}{2} + \frac{x^3}{3} + \frac{x^4}{4} + \cdots \\ &\;=\;& \Ring(x) \end{array}$$Now comes the cool part:

$$\Bag(\Ring(x)) = e^{\Ring(x)} = e^{- \ln(1 - x)} = (1 - x)^{-1} = \List(x)$$We are led to believe that for any type there is a "very explicit" bijection between bags of rings and lists. That is, it is possible to decompose any list to a multiset of rings (containing the same elements, because nothing about \(x\) is given) in such a way that the original list can be unambiguously recovered from the multiset.

Sounds pretty far-fetched? It is called the Chen–Fox–Lyndon theorem, by the way. This theorem has a curious history. The most accessible exposition is in De Bruijn, Klarner, Multisets of aperiodic cycles (1982). The term "Lyndon words" goes back to Lyndon, On Burnside's Problem (1954). The original result is in Shirshov, Subalgebras of free Lie algebras (1953, in Russian: Ширшов, Подалгебры свободных лиевых алгебр), where it is used to estimate dimensions of sub-algebras of free Lie algebras. See also I.57 (p. 85) in Analytic Combinatorics by Flajolet and Sedgewick.
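The decomposition behind the theorem can be computed with Duval's algorithm, a standard technique that is not taken from the references above. In this sketch the element type is `char` with its usual order (the theorem only needs some total order on \(x\)). Each factor is a Lyndon word, the lexicographically smallest rotation of an aperiodic necklace, so it serves as a canonical name for a ring. The factors come out in non-increasing order, which is why the bag alone is enough to restore the list. Function names are illustrative.

```cpp
#include <cassert>
#include <cstddef>
#include <set>
#include <string>
#include <vector>

// Duval's algorithm: factor s into Lyndon words w1 >= w2 >= ... >= wk.
std::vector<std::string> lyndon_factorization(const std::string& s) {
    std::vector<std::string> factors;
    const std::size_t n = s.size();
    std::size_t i = 0;
    while (i < n) {
        std::size_t j = i + 1, k = i;
        while (j < n && s[k] <= s[j]) {
            k = (s[k] < s[j]) ? i : k + 1;
            ++j;
        }
        while (i <= k) {  // emit copies of the Lyndon word of length j - k
            factors.push_back(s.substr(i, j - k));
            i += j - k;
        }
    }
    return factors;
}

// From the bag of rings back to the list: reading the multiset in
// descending order and concatenating restores the original word.
std::string restore(const std::multiset<std::string>& rings) {
    std::string word;
    for (auto it = rings.rbegin(); it != rings.rend(); ++it)
        word += *it;
    return word;
}
```

For example, "banana" decomposes into the bag {"b", "an", "an", "a"}, and any enumeration order of that bag restores "banana".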

If you are still not convinced that \(\Ring(x) = -\ln(1 - x)\), consider

$$\d\Ring(x) = -\d\ln(1 - x) = (1 - x)^{-1} = \List(x)$$If you cut a hole in a ring, you get a list!

We still have no interpretation for alternating series like \(\ln(1 + x) = x - x^2/2 + x^3/3 - x^4/4 + \cdots\). Fortunately, our good old friend \(\List(x) = (1 - x)^{-1}\) gives us

$$\List(2) = -1$$which, together with the inclusion–exclusion principle, can be used to concoct plausible-looking explanations of types like \(\sin(x) = x - x^3/3! + x^5/5! - \cdots\), etc.

Of course, (almost) everything here can be made formal and rigorous. Types under \(+\) and \(\cdot\) form a semiring. The additive structure can be extended to an abelian group by the Grothendieck group construction. The field of fractions can be built. Puiseux and Levi-Civita series in this field provide "analysis" without the baggage of limits and topology.

But they definitely had more fun back in the eighteenth century. Can we, instead of talking about "fibre bundles over \(\Ring(\lambda t.t\cdot x)\)", dream of Möbius strips of streams?

The BSD VM analyzes the object reference counter in the page scanner (vm_pageout.c:vm_pageout_scan()):

```c
/*
 * If the object is not being used, we ignore previous
 * references.
 */
if (m->object->ref_count == 0) {
        vm_page_flag_clear(m, PG_REFERENCED);
        pmap_clear_reference(m);
}

....

/*
 * Only if an object is currently being used, do we use the
 * page activation count stats.
 */
if (actcount && (m->object->ref_count != 0)) {
        vm_pageq_requeue(m);
}

....
```

Enumerate all binary trees with N nodes, the C++20 way:

```cpp
#include <memory>
#include <string>
#include <cassert>
#include <cstdlib>
#include <iostream>
#include <coroutine>
#include <cppcoro/generator.hpp>

struct tnode;

using tree = std::shared_ptr<tnode>;

struct tnode {
    tree left;
    tree right;
    tnode() {}
    tnode(tree l, tree r) : left(l), right(r) {}
};

/* Prints a tree as nested brackets; "*" is the empty tree. */
auto print(tree t) -> std::string {
    return t ? (std::string{"["} + print(t->left) + " "
                + print(t->right) + "]") : "*";
}

/* Lazily yields every binary tree with n nodes: a root, a left subtree
   with i nodes and a right subtree with n - i - 1 nodes, for each i. */
cppcoro::generator<tree> gen(int n) {
    if (n == 0) {
        co_yield nullptr;
    } else {
        for (int i = 0; i < n; ++i) {
            for (auto left : gen(i)) {
                for (auto right : gen(n - i - 1)) {
                    co_yield std::make_shared<tnode>(left, right);
                }
            }
        }
    }
}

int main(int argc, char **argv) {
    if (argc < 2) {
        std::cerr << "usage: " << argv[0] << " N" << std::endl;
        return 1;
    }
    for (auto t : gen(std::atoi(argv[1]))) {
        std::cout << print(t) << std::endl;
    }
}
```

Source: gen.cpp.

To generate Catalan numbers, do:

```
$ for i in $(seq 0 1000000) ;do ./gen $i | wc -l ;done
1
1
2
5
14
42
132
429
1430
4862
16796
58786
208012
742900
```
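As a cross-check on the output above, the decomposition used by `gen()` gives the recurrence \(C_0 = 1\), \(C_n = \sum_{i=0}^{n-1} C_i\cdot C_{n-i-1}\). A minimal sketch that counts the trees instead of enumerating them (the name `catalan_numbers` is illustrative):

```cpp
#include <cassert>
#include <cstdint>
#include <vector>

// c[n] = number of binary trees with n nodes, using the same split as gen():
// a root plus a left subtree of i nodes and a right subtree of n - i - 1 nodes.
std::vector<std::uint64_t> catalan_numbers(int max_n) {
    assert(max_n >= 0);
    std::vector<std::uint64_t> c(max_n + 1, 0);
    c[0] = 1;  // gen(0) yields exactly one tree: the empty one
    for (int n = 1; n <= max_n; ++n)
        for (int i = 0; i < n; ++i)
            c[n] += c[i] * c[n - i - 1];
    return c;
}
```

This takes time quadratic in \(n\) rather than proportional to the number of trees, so it reaches well past \(n = 13\) (64-bit overflow sets in around \(n = 36\)).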

Abstract: The dual of the familiar construction of the graph of a function is considered. The symmetry between graphs and cographs can be extended to a surprising degree.

Given a function \(f : A \rightarrow B\), the graph of f is defined as

$$f^* = \{(x, f(x)) \mid x \in A\}.$$In fact, within the ZFC framework, functions are defined as graphs. A graph is a subset of the Cartesian product \(A \times B\). One might want to associate with \(f\) a dual cograph object: a certain quotient set of the disjoint sum \(A \sqcup B\), which would uniquely identify the function. To understand the structure of the cograph, define the graph of a morphism \(f : A \rightarrow B\) in a category with suitable products as a fibred product:

$$\begin{CD} f^* @>\pi_2>> B\\ @V \pi_1 V V @VV 1_B V\\ A @>>f> B \end{CD}$$In the category of sets this gives the standard definition. The cograph can be defined by a dual construction as a push-out:

$$\begin{CD} A @>1_A>> A\\ @V f V V @VV j_1 V\\ B @>>j_2> f_* \end{CD}$$Expanding this in the category of sets gives the following definition:

$$f_* = (A \sqcup B) / \pi_f,$$where \(\pi_f\) is the equivalence relation generated by (that is, the reflexive, symmetric and transitive closure of) a relation \(\theta_f\) given by (assuming in the following, without loss of generality, that \(A\) and \(B\) are disjoint)

$$x\overset{\theta_f}{\sim} y \equiv y = f(x)$$That is, \(A\) is partitioned by \(\pi_f\) into subsets which are inverse images of elements of \(B\) and to each such subset the element of \(B\) which is its image is glued. This is somewhat similar to the mapping cylinder construction in topology. Some similarities between graphs and cographs are immediately obvious. For graphs:

$$\forall x\in A\; \exists! y\in B\; (x, y)\in f^*$$ $$f(x) = \pi_2((\{x\}\times B) \cap f^*)$$ $$f(U) = \{y \mid y = f(x) \wedge x\in U \} = \pi_2((U\times B)\cap f^*)$$where \(x\in A\) and \(U\subseteq A\). Similarly, for cographs:

$$\forall x\in A\; \exists! y\in B\; [x] = [y]$$ $$f(x) = [x] \cap B$$ $$f(U) = (\bigcup [U])\cap B$$where \([x]\) is the equivalence class of \(x\) w.r.t. \(\pi_f\) and \([U] = \{[x] \mid x \in U\}\). For inverse images:

$$f^{-1}(y) = \pi_1((A \times \{y\}) \cap f^*) = [y] \cap A$$ $$f^{-1}(S) = \pi_1((A \times S) \cap f^*) = (\bigcup [S])\cap A$$where \(y\in B\) and \(S\subseteq B\).

A graph can be expressed as

$$f^* = \bigcup_{x \in A}\{(x, f(x))\}$$To write out a similar representation of a cograph, we have to recall some elementary facts about equivalence relations.

Given a set \(A\), let \(Eq(A) \subseteq Rel(A) = P(A \times A)\) be the set of equivalence relations on \(A\). For a relation \(\pi \subseteq A \times A\), we have

$$\pi \in Eq(A) \;\; \equiv \;\; \pi^0 = \Delta \subseteq \pi \; \wedge \; \pi^n = \pi, n \in \mathbb{Z}, n \neq 0.$$To each \(\pi\) corresponds a surjection \(A \twoheadrightarrow A/\pi\). Assuming the axiom of choice (in the form "all epics split"), an endomorphism \(A \twoheadrightarrow A/\pi \rightarrowtail A\) can be assigned (non-uniquely) to \(\pi\). It is easy to check that this gives \(Eq(A) = End(A) / Aut(A)\), where \(End(A)\) and \(Aut(A)\) are the monoid and the group of set endomorphisms and automorphisms respectively, with composition as the operation (\(Aut(A)\) is not, in general, normal in \(End(A)\), so \(Eq(A)\) does not inherit any useful operation from this quotient set representation). In addition to the monoid structure (w.r.t. composition) that \(Eq(A)\) inherits from \(Rel(A)\), it is also a lattice with infimum and supremum given by

$$\pi \wedge \rho = \pi \cap \rho$$ $$\pi \vee \rho = \mathtt{tr}(\pi \cup \rho) = \bigcup_{n \in \mathbb{N}}(\pi \cup \rho)^n$$For a subset \(X\subseteq A\) define an equivalence relation \(e(X) = \Delta_A \cup (X\times X)\), so that

$$x\overset{e(X)}{\sim} y \equiv x = y \vee \{x, y\} \subseteq X$$(Intuitively, \(e(X)\) collapses \(X\) to one point.) It is easy to check that

$$f_* = \bigvee_{x \in A}e(\{x, f(x)\})$$which is the desired dual of the graph representation above.
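For finite sets this representation is directly computable: joining the relations \(e(\{x, f(x)\})\) one by one is exactly what a union–find structure does. A sketch under the assumptions that \(A = \{0, \ldots, |A| - 1\}\), \(B = \{0, \ldots, |B| - 1\}\), and that \(y \in B\) is encoded as \(|A| + y\) in the disjoint sum; the class and method names are illustrative:

```cpp
#include <cassert>
#include <cstddef>
#include <numeric>
#include <vector>

// Cograph of f : A -> B for finite A and B. In the disjoint sum, x in A is
// encoded as x and y in B as |A| + y. The partition pi_f is built as the join
// of the relations e({x, f(x)}) by merging x with f(x) in a union-find forest.
class Cograph {
public:
    Cograph(const std::vector<std::size_t>& f, std::size_t b_size)
        : a_size_(f.size()), parent_(f.size() + b_size) {
        std::iota(parent_.begin(), parent_.end(), std::size_t{0});
        for (std::size_t x = 0; x < a_size_; ++x) {
            assert(f[x] < b_size);
            unite(x, a_size_ + f[x]);  // collapse {x, f(x)} to one point
        }
    }

    // f(x) = [x] intersected with B: the unique element of B in the class of x.
    std::size_t apply(std::size_t x) {
        const std::size_t root = find(x);
        for (std::size_t y = 0; a_size_ + y < parent_.size(); ++y)
            if (find(a_size_ + y) == root) return y;
        assert(false && "the class of x always contains f(x)");
        return 0;
    }

    // f^{-1}(y) = [y] intersected with A.
    std::vector<std::size_t> preimage(std::size_t y) {
        const std::size_t root = find(a_size_ + y);
        std::vector<std::size_t> xs;
        for (std::size_t x = 0; x < a_size_; ++x)
            if (find(x) == root) xs.push_back(x);
        return xs;
    }

private:
    // Representative of the equivalence class of v, with path halving.
    std::size_t find(std::size_t v) {
        while (parent_[v] != v) {
            parent_[v] = parent_[parent_[v]];
            v = parent_[v];
        }
        return v;
    }

    void unite(std::size_t u, std::size_t v) { parent_[find(u)] = find(v); }

    std::size_t a_size_;
    std::vector<std::size_t> parent_;
};
```

For example, for \(f = \{0 \mapsto 1, 1 \mapsto 1, 2 \mapsto 0, 3 \mapsto 1\}\) into a three-element \(B\), the class of \(y = 1\) contains \(x = 0, 1, 3\), while \(y = 2\), having no preimage, stays a singleton.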

I just realised that in any unital ring commutativity of addition follows from distributivity:

$$\begin{array}{r@{\;}c@{\;}l@{\quad}} a + b &\;=\;& -a + a + a + b + b - b \\ &\;=\;& -a + a\cdot(1 + 1) + b\cdot(1 + 1) - b \\ &\;=\;& -a + (a + b)\cdot(1 + 1) - b \\ &\;=\;& -a + (a + b)\cdot 1 + (a + b)\cdot 1 - b \\ &\;=\;& -a + a + b + a + b - b \\ &\;=\;& b + a \end{array}$$

The same holds for unital modules, algebras, vector spaces, &c. Note that multiplication doesn't even need to be associative. It's amazing how such things can pass unnoticed.


It occurred to me that features added by the C99 standard (compound literals and variadic macros) can be used to implement safe variadic functions, that is, something that syntactically looks like a normal call of a function with a variable number of arguments, but in effect calls a function with all arguments packed into an array and with the array size explicitly supplied:

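```c
#include <stdio.h>

/* Number of elements in an array (not a pointer!). */
#define sizeof_array(arr) ((sizeof (arr))/(sizeof (arr)[0]))

/* Packs the arguments into a NULL-terminated compound-literal array and
   passes its length (without the terminator) explicitly. */
#define FOO(a, ...) \
        foo((a), (char *[]){ __VA_ARGS__, 0 }, sizeof_array(((char *[]){ __VA_ARGS__ })))

int foo(int x, char **str, int n) {
        printf("%i %i\n", x, n);
        while (n--)
                printf("%s\n", *(str++));
        /* str now points at the terminating null pointer. */
        printf("last: %s\n", *str != NULL ? *str : "(null)");
        return 0;
}

int main(int argc, char **argv)
{
        FOO(1, "a", "boo", "cooo", "dd", argv[0]);
        return 0;
}
```

This outputs

```
1 5
a
boo
cooo
dd
./a.out
last: (null)
```

Expect me to use this shortly somewhere.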

Concurrency Control and Recovery in Database Systems by Philip A. Bernstein, Vassos Hadzilacos, and Nathan Goodman.

Delayed commit as an alternative to keeping "commit-time" locks: when transaction T1 accesses a resource modified by an active transaction T2, it just proceeds, hoping that T2 will commit. If T1 tries to commit while T2 is still active, its commit is delayed until T2 commits or aborts. If T2 aborts, T1 has to abort too (and can be restarted). One can imagine an "abort rate" tracked for each resource, with delayed commits used only when it is low enough.

Pragmatically, this means that read locks can be released when the transaction terminates (i.e., when the scheduler receives the transaction's Commit or Abort), but write locks must be held until after the transaction commits or aborts (i.e., after the DM processes the transaction's Commit or Abort).

This strategy ("Static 2PL scheduler") generates strict histories. How does it generalize to other types of locks?

Interesting section on thrashing:

A pure restart policy (abort a transaction when it tries to acquire an already held lock) works well when data contention is high.

A measure of thrashing:

$$W = k \cdot k \cdot N / D,$$where

\(N\) is the multiprogramming level,

\(k\) is the number of locks required by a transaction,

\(D\) is the number of lockable entities.

Thrashing starts when \(W \geq 1.5\).

This can be applied to resource (memory) thrashing too.
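The rule of thumb is easy to evaluate. A minimal sketch (function names are illustrative) that also makes the quadratic dependence on \(k\) visible: doubling the number of locks per transaction quadruples \(W\).

```cpp
#include <cassert>

// W = k * k * N / D: k locks per transaction, multiprogramming level N,
// D lockable entities.
double thrashing_measure(double k, double n, double d) {
    assert(d > 0);
    return k * k * n / d;
}

// Rule of thumb from the book: thrashing starts when W >= 1.5.
bool is_thrashing(double k, double n, double d) {
    return thrashing_measure(k, n, d) >= 1.5;
}
```

For example, with 2000 lockable entities and 10 locks per transaction, the threshold is reached at a multiprogramming level of 30.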

As a simple example, when Windows started up, it increased the size of msdos's internal file table (the SFT, that's the table that was created by the FILES= line in config.sys). It did that to allow more than 20 files to be opened on the windows system (a highly desirable goal for a multi-tasking operating system). But it did that by using an undocumented API call, which returned a pointer to a set of “interesting” pointers in msdos. It then indexed a known offset relative to that pointer, and replaced the value of the master SFT table with its own version of the SFT. When I was working on msdos 4.0, we needed to support Windows. Well, it was relatively easy to guarantee that our SFT was at the location that Windows was expecting. But the problem was that the msdos 4.0 SFT was 2 bytes larger than the msdos 3.1 SFT. In order to get Windows to work, I had to change the DOS loader to detect when win.com was being loaded, and if it was being loaded, I looked at the code at an offset relative to the base code segment, and if it was a MOV instruction, and the amount being moved was the old size of the SFT, I patched the instruction in memory to reflect the new size of the SFT! Yup, msdos 4.0 patched the running windows binary to make sure Windows would still continue to work.

And these are the people who design and implement the most widely used software in the world. The world is a scary place indeed.

"The late André Bensoussan worked with me on the Multics .... We were working on a major change to the file system ...

André took on the job of design, implementation, and test of the VTOC manager. He started by sitting at his desk and drawing a lot of diagrams. I was the project coordinator, so I used to drop in on him and ask how things were going. "Still designing," he'd say. He wanted the diagrams to look beautiful and symmetrical as well as capturing all the state information. ... I was glad when he finally began writing code. He wrote in pencil, at his desk, instead of using a terminal. ...

Finally André took his neat final pencil copy to a terminal and typed the whole program in. His first compilation attempt failed; he corrected three typos, tried again, and the code compiled. We bound it into the system and tried it out, and it worked the first time.

In fact, the VTOC manager worked perfectly from then on. ...

How did André do this, with no tool but a pencil?

Those people were programming an operating system kernel that supported kernel-level multi-threading and SMP (in the 60s!), and had most of the features UNIX kernels have now and some that no later operating system has, like transparent HSM. All this under hardware constraints that would make a modern washing machine look like a supercomputer. Of course they were thinking, then typing.

There is a typical problem with Emacs experienced by people who frequently switch between keyboard mappings, for example, to work with non-ASCII languages: the fundamental Emacs keyboard shortcuts (and one has to invoke them a lot to use Emacs efficiently) all use ASCII keys. For example, when I want to save my work while entering some Russian text, I have to do something like Alt-Space (switch to the US keyboard layout), Control-x-s (an Emacs shortcut to save buffers), Alt-Space (switch back to the Cyrillic keyboard layout). Switching out of and back into a keyboard layout only to tap a short key sequence is really annoying.

Two solutions are usually proposed:

Duplicate standard key sequences in other keyboard layouts. For example,

```elisp
(global-set-key (kbd "C-ч ы") 'save-some-buffers)
```

This expression in .emacs binds Control-ч-ы to the same command that Control-x-s is usually bound to. This eliminates the need to switch layouts, because ч and x (and likewise ы and s) are located on the same physical key (in the JCUKEN and QWERTY layouts respectively).

Another solution relies on the fact that Emacs is a complete operating system and, therefore, has its own keyboard layout switching mechanism (bound to Control-\ by default). When this mechanism is used, Emacs re-interprets normal keys according to its internal layout, so that typing s inserts ы when in internal Russian mode, while all command sequences continue to work independently of the layout. The mere idea of having two independent layout switching mechanisms and two associated keyboard state indicators is clearly ugly beyond words (except for people who use Emacs as their /sbin/init).

Fortunately, there is another way:

```elisp
; Map Modifier-CyrillicLetter to the underlying Modifier-LatinLetter, so that
; control sequences can be used when keyboard mapping is changed outside of
; Emacs.
;
; For this to work correctly, .emacs must be encoded in the default coding
; system.
;
(require 'cl-lib)

(cl-mapcar
 (lambda (r e) ; R and E are matching Russian and English keysyms
   ; iterate over modifiers
   (mapc (lambda (mod)
           (define-key input-decode-map
             (vector (list mod r)) (vector (list mod e))))
         '(control meta super hyper))
   ; finally, if Russian key maps nowhere, remap it to the English key without
   ; any modifiers
   (define-key local-function-key-map (vector r) (vector e)))
 "йцукенгшщзхъфывапролджэячсмитьбю"
 "qwertyuiop[]asdfghjkl;'zxcvbnm,.")
```

(Inspired by a cryptic remark about "Translation Keymaps" in vvv's .emacs.)

Licence my roving hands, and let them go,

Before, behind, between, above, below.

O my America! my new-found-land

-- J. Donne, 1633.

Блуждающим рукам моим дай разрешенье,

Пусти вперед, назад, промеж, и вверх, и вниз,

О дивный новый мир, Америка моя!

The variant reading "reeing" instead of "roving" is even better. I hope that "О дивный новый мир" (O brave new world) is not entirely anachronistic.

Paul Turner gave a talk about a new threading interface, designed by Google, at this year's Linux Plumbers Conference.

The idea is, very roughly, to implement the ucontext interface with kernel support. This gives the benefits of kernel threads (e.g., SMP support), while retaining the fast context switches of user threads. A switchto_switch(tid) call hands off control to the specified thread without going through the kernel scheduler. This is like swapcontext(3), except that the kernel stack pointer is switched too. Of course, there is still the overhead of the system call and return, but it is not as important as it used to be: the cost of a normal context switch is dominated by the scheduler invocation (with all the associated locking), and things like TLB flushes reduce the difference between user and kernel context switching even further.

I did something similar (but much more primitive) in 2001. The difference was that in that old implementation, one could switch context to a thread running in a different address space, so it was possible to make a "continuation call" to another process. This was done to implement the Solaris doors RPC mechanism on Linux. Because this is an RPC mechanism, arguments have to be passed, so each context switch also performed a little dance to copy arguments between address spaces.
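The user-level primitive the talk starts from, swapcontext(3), is easy to demonstrate: control is handed directly to a named context, with no scheduler involved. A minimal sketch, assuming Linux with glibc (`<ucontext.h>` is marked obsolescent by POSIX but is still provided there); it shows only the user-level mechanism, not the proposed `switchto_switch()` interface, and all names in `demo` are illustrative:

```cpp
#include <cassert>
#include <string>
#include <ucontext.h>

// Two contexts that hand control to each other explicitly.
namespace demo {

ucontext_t main_ctx;
ucontext_t coro_ctx;
alignas(16) char coro_stack[64 * 1024];
std::string trace;

void coroutine_body() {
    trace += "B";
    swapcontext(&coro_ctx, &main_ctx);  // yield: resume main_ctx directly
    trace += "D";
    // Returning from here continues in uc_link, i.e. main_ctx.
}

std::string run() {
    trace.clear();
    [[maybe_unused]] int rc = getcontext(&coro_ctx);
    assert(rc == 0);
    coro_ctx.uc_stack.ss_sp = coro_stack;
    coro_ctx.uc_stack.ss_size = sizeof coro_stack;
    coro_ctx.uc_link = &main_ctx;
    makecontext(&coro_ctx, coroutine_body, 0);

    trace += "A";
    rc = swapcontext(&main_ctx, &coro_ctx);  // run the coroutine until it yields
    assert(rc == 0);
    trace += "C";
    rc = swapcontext(&main_ctx, &coro_ctx);  // resume it; it runs to completion
    assert(rc == 0);
    trace += "E";
    return trace;  // "ABCDE"
}

}  // namespace demo
```

Each `swapcontext()` call saves the current registers into its first argument and resumes the second; in the proposed kernel-supported variant the same hand-off would also switch the kernel stack pointer.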

Let's talk about one of the simplest, if not trivial, subjects in the oldest and best-established branch of mathematics: rectangle area in elementary Euclidean geometry. The story contains two twists and an anecdote.

We all know that the area of a rectangle or a parallelogram is the product of its base and height, and the area of a triangle is half of that (the areas of a parallelogram, a triangle and a rectangle can all be reduced to each other by a device invented by Euclid), but Euclid would not say that: the idea that measures such as lengths, areas or volumes can be multiplied is alien to him, as it still is to Galileo. There is a huge body of literature on the evolution that culminated in our modern notion of number, unifying the disparate and incompatible numbers and measures of earlier mathematics; suffice it to say that before the time of Newton and Leibniz, ratios and fractions were not the same thing.

Euclid instead says (Book VI, prop. I, hereinafter quotes from the Elements are given as <Greek | English | Russian>):

<τὰ τρίγωνα καὶ τὰ παραλληλόγραμμα, τὰ ὑπὸ τὸ αὐτὸ ὕψος ὄντα πρὸς ἄλληλά ἐστιν ὡς αἱ βάσεις. | Triangles and parallelograms, which are under the same height are to one another as their bases. | Треугольники и параллелограммы под одной и той же высотой, [относятся] друг к другу как основания.>

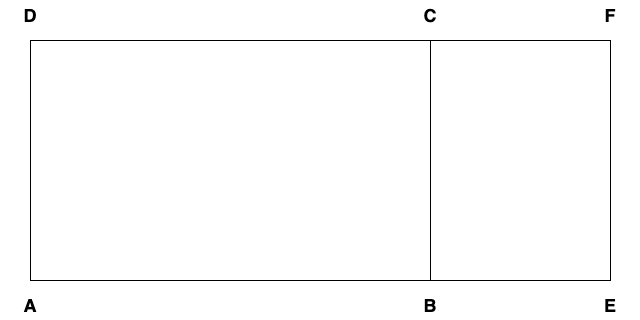

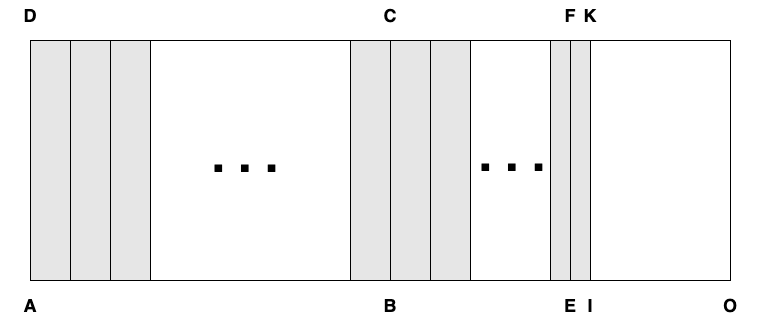

Given rectangles \(ABCD\) and \(AEFD\) with the same height \(AD\), we want to prove that the ratio of their areas is the same as the ratio of their bases: \(\Delta(ABCD)/\Delta(AEFD) = AB/AE\).

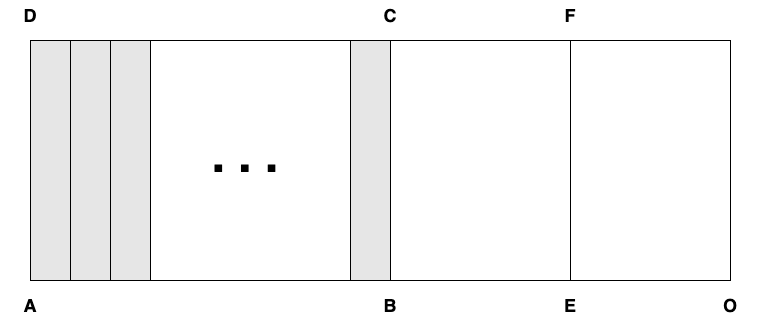

First, consider the case where the bases are commensurable, that is, as we would say, \(AB/AE = n/m\) for some integers \(n\) and \(m\), or, as Euclid would say, there is a length \(AX\) such that \(AB = n \cdot AX\) (that is, the interval \(AB\) is equal to the interval \(AX\) extended \(n\) times) and \(AE = m \cdot AX\). Then \(ABCD\) can be divided into \(n\) equal rectangles like \(AXYD\), with height \(AD\), base \(AX\) and area \(\Delta_0\), and \(AEFD\) can be divided into \(m\) of them.

Then,

$$\begin{array}{lclclcl} \Delta(ABCD) & = & \Delta(AXYD) & + & \Delta(XX'Y'Y) & + & \ldots\\ & = & n \cdot \Delta_0, & & & & \end{array}$$and

$$\begin{array}{lclclcl} \Delta(AEFD) & = & \Delta(AXYD) & + & \Delta(XX'Y'Y) & + & \ldots \\ & = & m \cdot \Delta_0 \end{array}$$so that \(\Delta(ABCD)/\Delta(AEFD) = n/m = AB/AE\), as required.

Starting from the early twentieth century, the rigorous proof of the remaining incommensurable case in a school-level exposition typically involves some form of limit and is based on an implicit or explicit continuity axiom, usually equivalent to Cavalieri's principle.

There is, however, a completely elementary, short and elegant proof that requires no additional assumptions. This proof is used by Legendre (I don't know who the original author is) in his Elements, Éléments de géométrie. Depending on the edition, it is Proposition III in either Book III (p. 100) or Book IV (p. 90); some nineteenth-century editions, especially those with "additions and modifications by M. A. Blanchet", are butchered beyond recognition, so be careful. The proof goes like this:

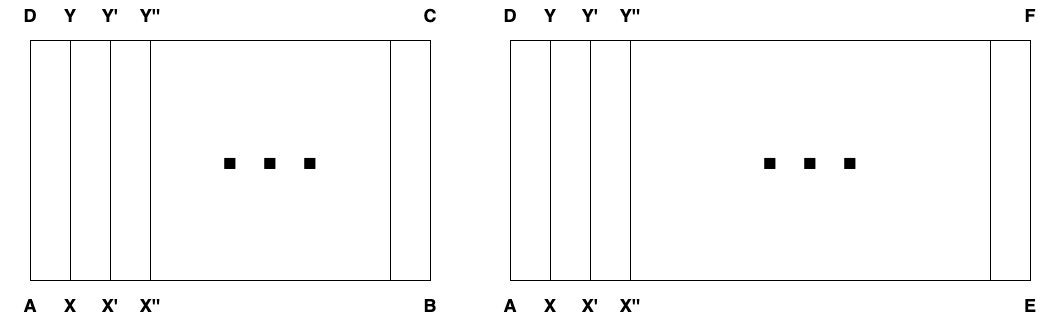

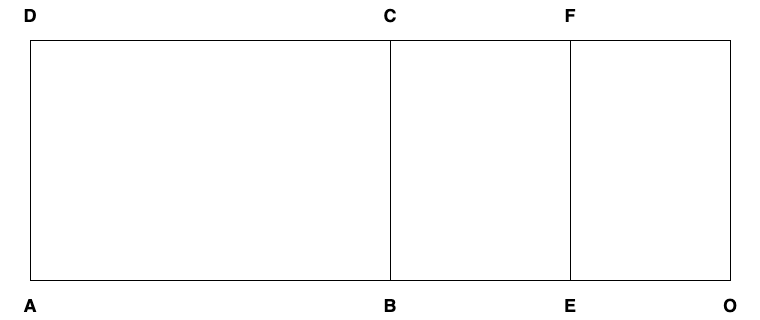

For incommensurable \(AB\) and \(AE\) consider the ratio \(\Delta(ABCD)/\Delta(AEFD)\). If \(\Delta(ABCD)/\Delta(AEFD) = AB/AE\) we are done. If \(\Delta(ABCD)/\Delta(AEFD)\) is not equal to \(AB/AE\), it is instead equal to \(AB/AO\) and either \(AE < AO\) or \(AE > AO\). Consider the first case (the other one is similar).

The points at the base are in order \(A\), then \(B\), then \(E\), then \(O\).

Divide \(AB\) into \(n\) equal intervals, each shorter than \(EO\). This requires what we now call the Archimedes–Eudoxus axiom, which is implied by Definition IV of Book V:

<λόγον ἔχειν πρὸς ἄλληλα μεγέθη λέγεται, ἃ δύναται πολλαπλασιαζόμενα ἀλλήλων ὑπερέχειν. | Magnitudes are said to have a ratio to one another which can, when multiplied, exceed one another. | Величины имеют отношение между собой, если они взятые кратно могут превзойти друг друга.>

Then continue laying off intervals of the same length along \(BE\) until we get to a point \(I\) outside of \(BE\) but within \(EO\) (this is possible because each interval is shorter than \(EO\)). The points are now in order \(A\), then \(B\), then \(E\), then \(I\), then \(O\).

\(AB\) and \(AI\) are commensurable, so \(\Delta(ABCD)/\Delta(AIKD) = AB/AI\). Also, \(\Delta(ABCD)/\Delta(AEFD) = AB/AO\), so \(\Delta(AIKD)/\Delta(AEFD) = AI/AO\). By construction \(AI < AO\), hence \(\Delta(AIKD) < \Delta(AEFD)\), but \(AEFD\) is a proper part of \(AIKD\), so \(\Delta(AEFD) < \Delta(AIKD)\). Contradiction.

Step back and look at the structure of these two proofs from the modern perspective. Fix the height and let \(\Delta(X)\) be the area of the rectangle with the base of length \(X\). By an assumption that we would call "additivity of measure", \(\Delta(X+Y) = \Delta(X) + \Delta(Y)\), that is, \(\Delta\) is an additive function. A general and easy-to-establish fact (mentioned with remarkable persistence on this blog [Unexpected isomorphism], [The Hunt for Addi(c)tive Monster]) is that any additive function is linear on rationals, that is, \(\Delta(n/m \cdot X) = n/m \cdot \Delta(X)\). This corresponds to the "commensurable" part of the proofs. To complete the proof we need linearity: \(\Delta(X) = X \cdot H\), where \(H = \Delta(1)\). But additive functions are not necessarily linear. To obtain linearity, an additional condition is needed. The traditional proof uses continuity: a continuous (at least at one point) additive function is necessarily linear.

Legendre's proof uses monotonicity: a monotonic additive function is always linear. This is clever, because monotonicity is not an additional assumption: it follows from the already assumed positivity of measure: If \(Y > X\), then \(\Delta(Y) = \Delta(X + (Y - X)) = \Delta(X) + \Delta(Y - X) > \Delta(X)\), as \(\Delta(Y - X) > 0\).
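Both steps can be spelled out in modern notation (a sketch; \(H = \Delta(1)\) for the fixed height, \(X > 0\), and \(p, q\) positive rationals). Additivity alone gives the rational case, and monotonicity then squeezes the general one:

$$\begin{array}{l} m\cdot\Delta(X/m) = \Delta(X) \;\Rightarrow\; \Delta(n/m \cdot X) = n\cdot\Delta(X/m) = n/m\cdot\Delta(X), \\ p < X < q \;\Rightarrow\; p\cdot H = \Delta(p) < \Delta(X) < \Delta(q) = q\cdot H. \end{array}$$

Since \(p\) and \(q\) can be taken arbitrarily close to \(X\), the only number lying between all such \(p\cdot H\) and \(q\cdot H\) is \(X\cdot H\), hence \(\Delta(X) = X\cdot H\).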

What does Euclid's original proof look like? (He proves the triangle version, which is similar to the rectangle case.)

Wait... It is unbelievably short, especially given that the Elements use no notation and spell everything out in words, and it covers both triangles and parallelograms. It definitely has no separate "commensurable" and "incommensurable" parts. How is this possible?

The trick is in the definition of equal ratios, Def. V of Book V:

<ἐν τῷ αὐτῷ λόγῳ μεγέθη λέγεται εἶναι πρῶτον πρὸς δεύτερον καὶ τρίτον πρὸς τέταρτον, ὅταν τὰ τοῦ πρώτου καὶ τρίτου ἰσάκις πολλαπλάσια τῶν τοῦ δευτέρου καὶ τετάρτου ἰσάκις πολλαπλασίων καθ᾽ ὁποιονοῦν πολλαπλασιασμὸν ἑκάτερον ἑκατέρου ἢ ἅμα ὑπερέχῃ ἢ ἅμα ἴσα ᾖ ἢ ἅμα ἐλλείπῃ ληφθέντα κατάλληλα.| Magnitudes are said to be in the same ratio, the first to the second and the third to the fourth, when, if any equimultiples whatever are taken of the first and third, and any equimultiples whatever of the second and fourth, the former equimultiples alike exceed, are alike equal to, or alike fall short of, the latter equimultiples respectively taken in corresponding order. | Говорят, что величины находятся в том же отношении: первая ко второй и третья к четвёртой, если равнократные первой и третьей одновременно больше, или одновременно равны, или одновременно меньше равнократных второй и четвёртой каждая каждой при какой бы то ни было кратности, если взять их в соответственном порядке>

In modern notation this means that

$$\Delta_1 / \Delta_2 = b_1 / b_2 \equiv (\forall n\in\mathbb{N}) (\forall m\in\mathbb{N}) (n\cdot\Delta_1 \gtreqqless m\cdot\Delta_2 = n\cdot b_1 \gtreqqless m\cdot b_2),$$where \(\gtreqqless\) is the "FORTRAN 3-way comparison operator" (a.k.a. the C++ spaceship operator):

$$X \gtreqqless Y = \begin{cases} -1, & X < Y\\ 0, & X = Y\\ +1, & X > Y \end{cases}$$This looks like a rather artificial definition of ratio equality, but with it the proof of Proposition I and many other proofs in Books V and VI become straightforward or even forced.

The approach of selecting the definitions to streamline the proofs is characteristic of abstract twentieth-century mathematics and it is amazing to see it in full force in the earliest mathematical text we have.

I'll conclude with the promised anecdote (unfortunately, I do not remember the source). An acquaintance of Newton, having met him in the Cambridge library and found, on inquiry, that Newton was reading the Elements, remarked something to the effect of "But Sir Isaac, haven't your methods superseded and obsoleted Euclid?". This is one of the two recorded cases when Newton laughed.


Let's find the sum

$$\sum_{n=1}^\infty {1\over{n(n+2)}}$$

There is a well-known standard way, which I managed to recall eventually. Given that

$${1 \over n(n+2)} = {1 \over 2}\cdot \left({1\over n} - {1\over n+2}\right)$$the sum can be re-written as

$$\sum_{n=1}^\infty {1\over{n(n+2)}} = \sum_{n=1}^\infty {1 \over 2}\left({1\over n} - {1\over n+2}\right) = {1\over 2}\left({1\over 1} - {1\over 3} + {1\over 2} - {1\over 4} + {1\over 3} - {1\over 5} + {1\over 4} - {1\over 6} \cdots\right)$$with almost all terms canceling each other, leaving

$$\sum_{n=1}^\infty {1\over{n(n+2)}} = {1\over 2}\left(1 + {1\over 2}\right) = {3\over 4}$$While this is easy to check, very little help is given in understanding how to arrive at the solution in the first place. Indeed, the first (and crucial) step is a rabbit pulled sans motif out of a conjurer's hat. The solution, fortunately, can be found in a more systematic fashion, by a relatively generic method. Enter generating functions.

First, introduce a function

$$f(t) = \sum_{n=1}^\infty {t^{n + 1}\over n}$$The series on the right converges absolutely when \(|t| < 1\), so one can define

$$g(t) = \int f(t)\, dt = \int \sum_{n=1}^\infty {t^{n + 1}\over n}\, dt = \sum_{n=1}^\infty \int {t^{n + 1}\over n}\, dt = \sum_{n=1}^\infty {t^{n + 2}\over {n(n+2)}} + C$$with the sum in question being

$$\sum_{n=1}^\infty {1\over{n(n+2)}} = g(1) - C = g(1) - g(0)$$The definition of \(g\) follows immediately from the form of the original sum, and there are only a few operations (integration, differentiation, etc.) that can be applied to \(g\) to produce \(f\).

The rest is more or less automatic. Note that

$$-\ln(1 - t) = t + {t^2\over 2} + {t^3\over 3} + \cdots$$

so that

$$f(t) = t^2 + {t^3\over 2} + {t^4\over 3} + \cdots = -t\ln(1-t)$$

therefore

$$g(t) = -\int t\ln(1-t)\,dt = \cdots = {1\over 4} (1 - t)^2 - {1\over 2} (1 - t)^2 \ln(1 - t) + (1 - t) \ln(1 - t) + t + C$$

where the integral is standard. Now,

$$g(1) - g(0) = 1 - {1\over 4} = {3\over 4}$$

Voilà!
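For completeness, the elided integration step can be filled in with the substitution \(u = 1 - t\), after which only the standard antiderivatives of \(\ln u\) and \(u\ln u\) are needed (the constant \(-1\) produced by \(-u = t - 1\) is absorbed into the integration constant):

$$-\int t\ln(1-t)\,dt = \int (1-u)\ln u\,du = \int \ln u\,du - \int u\ln u\,du$$

$$= \left(u\ln u - u\right) - \left({u^2\over 2}\ln u - {u^2\over 4}\right) + C' = {1\over 4}(1-t)^2 - {1\over 2}(1-t)^2\ln(1-t) + (1-t)\ln(1-t) + t + C$$

Since \((1-t)\ln(1-t)\) and \((1-t)^2\ln(1-t)\) both tend to \(0\) as \(t \to 1^-\), the non-constant part tends to \(1\) at \(t = 1\) and equals \({1\over 4}\) at \(t = 0\), and the additive constant cancels in \(g(1) - g(0)\). Evaluating at \(t = 1\) is legitimate: on \([0, 1]\) the terms \(t^{n+2}/(n(n+2))\) are bounded by \(1/(n(n+2))\), so the series for \(g\) converges uniformly there and \(g\) is continuous at \(1\).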

And just to check that things are not too far askew, a sub-exercise in pointless programming:

```scala
scala> (1 to 10000).map(x => 1.0/(x*(x+2))).reduceLeft(_+_)
res0: Double = 0.749900014997506
```

PS: of course this post is an exercise in tex2img usage.

PPS (Ed. 2022): tex2img is gone; switched to MathJax.
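The telescoping argument gives more than the limit: it yields the exact partial sum \(S_N = {1\over 2}\left(1 + {1\over 2} - {1\over N+1} - {1\over N+2}\right)\), which for \(N = 10000\) is about 0.749900015, in line with the REPL output above. A minimal C sketch (the function names are made up for this illustration) that compares a running sum against this closed form:

```c
#include <assert.h>

/* Direct partial sum S_N = sum_{k=1}^{N} 1/(k(k+2)). */
double direct_partial_sum(unsigned n)
{
    double s = 0.0;
    for (unsigned k = 1; k <= n; k++)
        s += 1.0 / ((double)k * (k + 2));
    return s;
}

/* Closed form left over after the telescoping cancellation:
 * S_N = (1/2)(1 + 1/2 - 1/(N+1) - 1/(N+2)). */
double telescoped_partial_sum(unsigned n)
{
    return 0.75 - 0.5 / (n + 1.0) - 0.5 / (n + 2.0);
}

/* Largest |running sum - closed form| over N = 1..n_max.
 * A single running sum keeps the check linear in n_max. */
double max_discrepancy(unsigned n_max)
{
    assert(n_max >= 1);
    double s = 0.0, worst = 0.0;
    for (unsigned k = 1; k <= n_max; k++) {
        s += 1.0 / ((double)k * (k + 2));
        double d = s - telescoped_partial_sum(k);
        if (d < 0)
            d = -d;
        if (d > worst)
            worst = d;
    }
    return worst;
}
```

Calling max_discrepancy(10000) should give a value at the level of floating-point rounding error, which is all one can ask of the direct sum.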

The ext3 htree code in the Linux kernel implements a peculiar version of a balanced tree, used to handle large directories efficiently.

An htree directory consists of block-sized nodes. Some of them (leaf nodes) contain directory entries in the same format as ext2. Other nodes contain an index: they are filled with hashes and pointers to other nodes.
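A minimal sketch of how an index node can be searched, assuming a simplified entry made of a 32-bit hash and a 32-bit child block number: binary search for the last entry whose hash does not exceed the target hash, with the first entry acting as a catch-all for everything below the second entry's hash. The struct and function names are hypothetical; in the real on-disk format the first entry's hash field is reused for the count/limit header, and the sketch ignores how hash collisions are handled.

```c
#include <assert.h>
#include <stdint.h>

/* Hypothetical, simplified index entry: hash plus child block number. */
struct toy_dx_entry {
    uint32_t hash;
    uint32_t block;
};

/* Return the child block that may hold names hashing to `target`:
 * the block of the last entry whose hash is <= target.
 * Entry 0 covers all hashes below entries[1].hash, so its hash is ignored. */
uint32_t toy_dx_lookup(const struct toy_dx_entry *entries, unsigned count,
                       uint32_t target)
{
    assert(count >= 1);
    unsigned lo = 1, hi = count;        /* search entries[1..count-1] */
    while (lo < hi) {
        unsigned mid = lo + (hi - lo) / 2;
        if (entries[mid].hash <= target)
            lo = mid + 1;
        else
            hi = mid;
    }
    /* lo is the first index whose hash exceeds target (or count). */
    return entries[lo - 1].block;
}
```

For an index holding hashes 0, 100 and 200 pointing at blocks 10, 11 and 12, a target hash of 150 selects block 11.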

When a new file is created in a directory, a directory entry is inserted into one of the leaf nodes. When a leaf node does not have enough space for the new entry, a new node is appended to the tree and part of the directory entries is moved there. This process is known as a split. A pointer to the new node is then inserted into some index node, which can overflow in turn, causing another split, and so on.

If splits propagate all the way up to the root of the tree, a new root has to be added (the tree grows).

It's obvious that in the worst case (extremely rare in practice) insertion of a new entry may require a new block on each tree level, plus a new root, right? Now, looking at the ext3_dx_add_entry() function, we see something strange:

```c
} else {
	dxtrace(printk("Creating second level index...\n"));
	memcpy((char *) entries2, (char *) entries,
	       icount * sizeof(struct dx_entry));
	dx_set_limit(entries2, dx_node_limit(dir));

	/* Set up root */
	dx_set_count(entries, 1);
	dx_set_block(entries + 0, newblock);
	((struct dx_root *) frames[0].bh->b_data)->info.indirect_levels = 1;

	/* Add new access path frame */
	frame = frames + 1;
	frame->at = at = at - entries + entries2;
	frame->entries = entries = entries2;
	frame->bh = bh2;
	err = ext3_journal_get_write_access(handle,
					     frame->bh);
	if (err)
		goto journal_error;
}
```

At this moment entries points to the already existing full node and entries2 to the newly created one. As one can see, the contents of entries are copied into entries2, and entries is declared to be the new root of the tree. So now the tree has a root node with a single pointer to the index node that... still does not have enough free space (remember, entries2 got everything entries had). The omitted code that follows proceeds with splitting the leaf node, assuming that its parent has enough space to insert a pointer to the new leaf. So how is this supposed to work? Or does it work at all? That's the tricky part, and the curious reader is invited to try to infer what's going on without looking at the rest of this post.

The answer is simple: by ext3 htree design, the capacity of the root node is smaller than that of a non-root index node. This is a byproduct of binary compatibility between htree and the old ext2 format: the root node is always the first block of the directory, and it always contains the dot and dotdot directory entries. As a result, when the contents of the old root are copied into the new node, that node ends up with a few spare slots, enough for the pointer that the subsequent leaf split has to insert.
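A back-of-the-envelope sketch of the capacity difference, in C. The byte sizes below are my reading of the on-disk structures (8-byte index entries, 12-byte records for "." and "..", an 8-byte root info header, an 8-byte fake directory entry at the start of a non-root index block) and should be treated as assumptions; the helper names are hypothetical, though they are meant to mirror what dx_root_limit() and dx_node_limit() compute in the kernel. The only point is that spare_after_copy() comes out positive: a node filled with a copy of a full root still has room for at least one more pointer.

```c
#include <assert.h>

/* Assumed on-disk sizes (see lead-in); not taken from kernel headers. */
enum {
    DX_ENTRY_SIZE  = 8,  /* 32-bit hash + 32-bit block number */
    FAKE_DIRENT    = 8,  /* inode, rec_len, name_len, file_type */
    DOT_RECORD     = 12, /* "." : 8-byte header + name padded to 4 bytes */
    DOTDOT_RECORD  = 12, /* "..": 8-byte header + name padded to 4 bytes */
    ROOT_INFO_SIZE = 8   /* root info header (hash version, levels, ...) */
};

/* Index entries that fit in the root block after ".", ".." and the info. */
unsigned root_limit(unsigned blocksize)
{
    return (blocksize - DOT_RECORD - DOTDOT_RECORD - ROOT_INFO_SIZE)
           / DX_ENTRY_SIZE;
}

/* Index entries that fit in a non-root index block after one fake dirent. */
unsigned node_limit(unsigned blocksize)
{
    return (blocksize - FAKE_DIRENT) / DX_ENTRY_SIZE;
}

/* Free slots in the new index node right after a full root is copied
 * into it, as in the "Creating second level index" branch. */
unsigned spare_after_copy(unsigned blocksize)
{
    assert(node_limit(blocksize) > root_limit(blocksize));
    return node_limit(blocksize) - root_limit(blocksize);
}
```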

This is obviously one of the worst hacks, and one of the least documented at that. Shame.

Thanks to Alex Tomas for clearing up this mystery for me. As he says: "Htree code is simple to understand: it only takes to tune yourself to Daniel Phillips brain-waves frequency".

"feminism means freedom, it means the right to be ... incoherent", p. 71

Let me state outright that I won't be able to provide a critique of the cogent rational argument that forms the core of Ms. Olufemi's book, for the simple reason that even the most diligent search will not find an argument of that sort there.

I am going to prove, with ample internal and external evidence, that the book does not present an articulated argument for anything: that it is little more than a haphazard collection of claims that are not only unsupported by evidence but do not even form a consistent sequence.

All references are to the paperback 2020 edition.

Preamble

The most striking feature of the book before us is that it is difficult to find a way to approach it. A sociological study and a political pamphlet still have something in common: they have a goal, which is to convince the reader. The methods of persuasion can differ widely and may involve data, logic or authority, but in any case, something is needed. As you start reading Feminism Interrupted, you are bound to find that this something is very well hidden. Indeed, as I just counted, one of the first sections, Who's the boss, has as many claims as it has sentences (some claims take the form of rhetorical questions). As you can see, there was no space left in it for any form of supporting argumentation. Because it is not immediately obvious how to analyse a text with such a (non-)structure, let us start the same way as Ms. Olufemi starts her Introduction and just read along, jotting down notes and impressions, gradually finding our way through the book and forming a conception of the whole.

The following is a numbered list of places in the book that made me sigh or laugh. They are classified as: E (not supported by Evidence), C (Contradiction with itself or an earlier claim) and I (Incoherence). These classes are necessarily arbitrary and overlapping. I will also provide commentary on some common themes and undercurrents running through the book.

One may object that this is too pedantic a way to review a book. Well, maybe it is, but this is the only way I know to lay the groundwork for justifiable conclusions, with which my review will end.

Notes on the text

1.E, p. 4: "neo-liberalism refers to the imposition of cultural and economic policies and practices by NGOs and governments in the last three to four decades that have resulted in the extraction and redistribution of public resources from the working class upwards" — this, of course, has very little to do with the definition of neo-liberalism. Trivial as it is, this sentence introduces one of the most interesting and unexpected themes that will resurface again and again: Ms. Olufemi, a self-anointed radical feminist from the extreme left of the political spectrum, is in many respects virtually indistinguishable from her brethren on the opposite side. Insidious "NGOs" machinating together with governments against common people are the staple imagery of the far right.

2.C, p. 5: "... that feminism has a purpose beyond just highlighting the ways women are 'discriminated' against... It taught me that feminism's task is to remedy the consequences of gendered oppression through organising... For me, 'justice work' involves reimagining the world we live in and working towards a liberated future for all... We refuse to remain silent about how our lives are limited by heterosexist, racist, capitalist patriarchy. We invest in a political education that seeks above all, to make injustice impossible to ignore." — With characteristic ease, which we will learn to appreciate, Ms. Olufemi tells us that feminism is more than words, and in the very next sentence supports this with her refusal to remain silent.

3.C, p. 7: "Pop culture and mainstream narratives can democratise feminist theory, remove it from the realm of the academic and shine a light on important grassroots struggle, reminding us that feminism belongs to no one." — Right after being schooled on how the iron fist of capitalist patriarchy controls every aspect of society, we suddenly learn that the media of that very capitalist society welcome the revolution.

4.C, p. 8: This is the first time that Ms. Olufemi has decided to grace us with a cited source (an article from Sp!ked, 2018). The reference is in the form of a footnote, and the footnote is a 70-character URL. That is what almost all her references and footnotes look like. A particularly gorgeous URL is in footnote 3 on p. 53: it's 173 characters long, of which the last 70 are unreadable gibberish. Am I supposed to retype this character by character on my phone? Or are the references for ornamentation only? In any case, it seems Ms. Olufemi either cannot hide her extreme contempt for her readers or has spent her life among people with an unusual amount of leisure.

5.E, p. 15: "When black feminists ... organised in the UK ... [t]hey were working towards collective improvement in material conditions... For example..." — The examples provided are the Grunwick strike by South Asian women and an Indian lady, Jayaben Desai. In the very next sentence, Ms. Olufemi concludes: "There is a long history of black women ... mounting organised and strategic campaigning and lobbying efforts". Again, as in 2.C, she is completely unfazed by the fact that the best examples of black feminist activity she is able to furnish have nothing to do with black feminists.

6.E, p. 23: "Critical feminism argues that state sexism has not lessened [for the last 50 years]". Evidence: "MPs in parliament hide the very insidious ways that the state continues to enable male dominance ... " — tension rises! — "the Conservative government introduced their plans to pass a Domestic Violence Bill with the intention of increasing the number of convictions for perpetrators of abuse... " — which looks good on the surface, but of course — "it is simply another example of the way the state plays on our anxieties about women's oppression to disguise the enactment of policies that trap women in subordinate positions." — Finally, we are about to learn how exactly the governments (and NGOs, remember!) have kept women subjugated for the last 50 years; we are agog with curiosity! — "Research from the Prison Reform Trust has found an increase in the number of survivors being arrested" (p. 24) — And then... And then there is nothing. How does this prove that things are no better than 50 years ago? Just follow Ms. Olufemi's example and completely expunge from your mind everything that you claimed more than five sentences and seconds ago.

7.C, p. 26: "But this figure does not tell the whole story. The impact of these cuts is felt particularly by low-income black women and women of colour." — Another constant motif of the book is that Ms. Olufemi alternately blames the state for violence and overreach, only to immediately demand an expansion of paternalistic services and welfare.

8.C, p. 27: "If a woman must disclose ... that she has been raped ... her dignity, agency and power over personal information is compromised." — In a sudden turn of events, our feminist seems to argue that it would be preferable for rape survivors to stay silent.

9.E, p. 28: When she does provide some sort of supporting evidence, one almost wishes she hadn't: "We know that thousands of disabled people have died as a direct result of government negligence surrounding Personal Independence Payments ...\((^9)\)" — The footnote is nothing but a 100-character URL, which I patiently typed in, only to be greeted with a 404. According to web.archive.org, the referred-to page never existed. Ultimately, after many false starts (whose details I shall spare you), I found the document on a completely different website:

https://questions-statements.parliament.uk/written-questions/detail/2018-12-19/203817

Imagine my (lack of) surprise when it turned out that government "negligence" is neither mentioned nor in any way implied or imputed: Ms. Olufemi simply fabricated the whole story.

10.I, p. 29: "[In Yarl's Wood IRC] they are locked in, unable to leave and subjected to surveillance by outsourced security guards. Tucked away in Bedford outside of the public consciousness, it's hard to think of a more potent example of state violence." — The judgment of anybody who, in a world of wars, ongoing genocides and the slaughter of human beings, maintains that the worst example of state violence is the suffering of women who fled their ruined countries for the relative safety of the UK must be thoroughly questioned. The second quoted sentence is also grammatically indefensible: it is not "it" that is tucked away in Bedford.

11.E, p. 30: In support of her claim that the state violently oppresses black women, Ms. Olufemi provides the stories of 3 black women who died in police custody over the course of... 50 years. "they reveal a pattern", she confidently concludes. No, they don't. Statistical data would, but they do not support Ms. Olufemi's thesis. She then proceeds to lament "a dystopian nightmare for the undocumented migrants", conveniently forgetting that these people tried as hard as they could to move to the dystopian UK and none of them hurried back. The section the quote is from is called State Killings, which somehow puts the 3 examples provided in the same rubric as the doings of Pol Pot and Mao.

12.E, p. 31: "If black women die disproportionately at the hands of the police, historically and in the present moment" — and then she proceeds on the assumption that they do, without providing any evidence. Readily available public data (from INQUEST and IOPC reports) clearly refute the premise.

13.C, p. 32: "This refusal to participate [in capitalism] takes many forms: feminist activists are finding new and creative ways to oppose austerity." — Ms. Olufemi's personal creative way to refuse to participate in the capitalist economy is to copyright a book, publish it with a publishing corporation (a capitalist enterprise, mind you) and then collect the royalties.